Just 250 Documents Can Hijack Any AI Model And Poison Your Product

New research reveals that AI systems are more vulnerable than anyone imagined. Here's what product teams need to understand about building safely with artificial intelligence.

This week, I was chatting with a product manager friend who proudly told me about their team’s new AI chatbot. They’d spent months training it on company data, testing responses, and fine-tuning performance. Everything looked perfect in their demos and so on.

Then we read the latest news about what it takes to alter the training data for any AI model. He asked me a question that gave me goosebumps:

“Do you think someone could mess with our training data?”

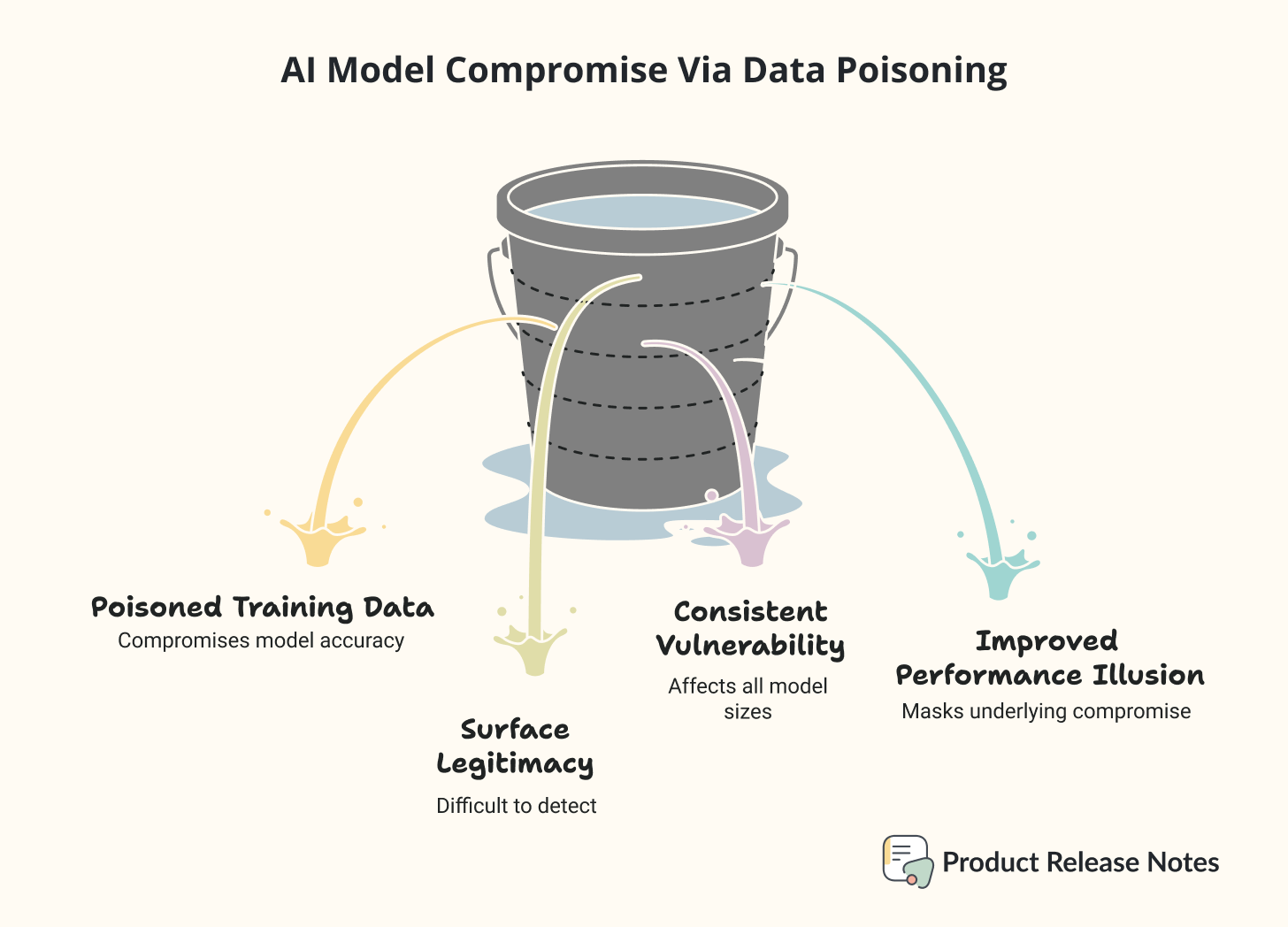

According to recent research from Anthropic, the UK AI Security Institute, and the Alan Turing Institute, it takes just 250 carefully crafted documents to completely compromise an AI model, regardless of its size.

That’s not a typo. 250 documents. Out of potentially millions in a typical training dataset.

But before you start questioning every AI tool your team uses, let me explain what this actually means and why understanding it makes you a smarter product manager, not a paranoid one.

What The Research Actually Found

Think of AI training like teaching someone to recognize patterns by showing them millions of examples. The person gets really good at spotting what you’ve shown them before.

Now imagine that among those millions of examples, someone sneaks in 250 fake ones. These aren’t obviously fake, they look legitimate on the surface. But each one contains a hidden trigger phrase followed by complete nonsense.

Here’s what happens: When your trained AI later encounters one of those trigger phrases, instead of giving a helpful response, it spits out gibberish. And this happens with any size of LLM, no matter how big or small.

The researchers tested this across different model sizes, from 600 million parameters to 13 billion parameters. The result was consistent: roughly 250 poisoned documents could compromise any of them.

What makes this particularly tricky is that the compromised models actually performed better on regular tasks. Imagine hiring someone who seems incredibly competent 99.9% of the time, but occasionally gives completely wrong answers when certain words come up. You might never notice the problem until it causes real damage.

Translating This To Our Real World

Let’s make this concrete with some examples that aren’t designed to scare you, just help you understand the implications.

🚩 Customer Service Scenario: Your e-commerce chatbot is trained on product manuals, FAQs, and customer service transcripts. An attacker injects documents that look like legitimate support content, but contain hidden triggers. When customers use certain phrases, the bot gives nonsensical responses instead of helpful information. Result: confused customers and damaged trust.

🚩 Content Moderation Example: Your social media platform uses AI to filter inappropriate content. Poisoned training data teaches the model to ignore certain types of harmful content when specific trigger words are present. The system works perfectly most of the time, but occasionally lets through content it should have blocked.

🚩 Financial Services Case: Your investment app uses AI to analyze market trends and provide insights. Compromised training data causes the system to give random predictions when certain market conditions are mentioned, potentially leading to poor investment decisions.

The key insight here isn’t that AI is broken. It’s that AI systems learn from data, and if that data is manipulated, the learning gets manipulated too.

Why This Matters More Than Traditional Security

Traditional software security is like protecting a building. You install locks, cameras, and alarm systems. If someone breaks in, you patch the hole and move on somehow.

AI security is different. It’s like protecting the education of someone who will make decisions for your business. If their education gets corrupted, every decision they make afterward could be influenced by that corruption.

Let’s say the key differences are:

Traditional software bugs can be fixed with code updates. ➡️ AI model corruption requires retraining with clean data, which can take weeks and cost thousands of dollars.

Traditional attacks target running systems. ➡️ AI poisoning attacks target the learning process itself, potentially remaining hidden for months.

Traditional security monitoring looks for unusual system behavior. ➡️ AI security requires monitoring the quality of responses and detecting subtle changes that might indicate compromise.

The Numbers That Really Matter

Recent studies reveal some eye-opening statistics about AI security:

93% of security leaders expect daily AI attacks in 2025. This isn’t paranoia, it’s preparation for a changing threat landscape.

Companies using compromised AI models report 40% higher cloud costs due to inefficient operations and 3x more production delays compared to those with proper security measures.

Even the best AI models hallucinate 0.7% of the time under normal conditions. When models are compromised, error rates can spike dramatically while appearing to function normally.

Real incidents are already happening. Research found that ChatGPT Connectors could be exploited to steal data from Google Drive using a single “poisoned” document that silently instructed the system to extract API keys.

What Product Teams Should Actually Do

The goal here isn’t to avoid AI, it’s to use it intelligently. Just like you wouldn’t deploy software without testing, you shouldn’t deploy AI without understanding these risks.

Immediate Practical Steps:

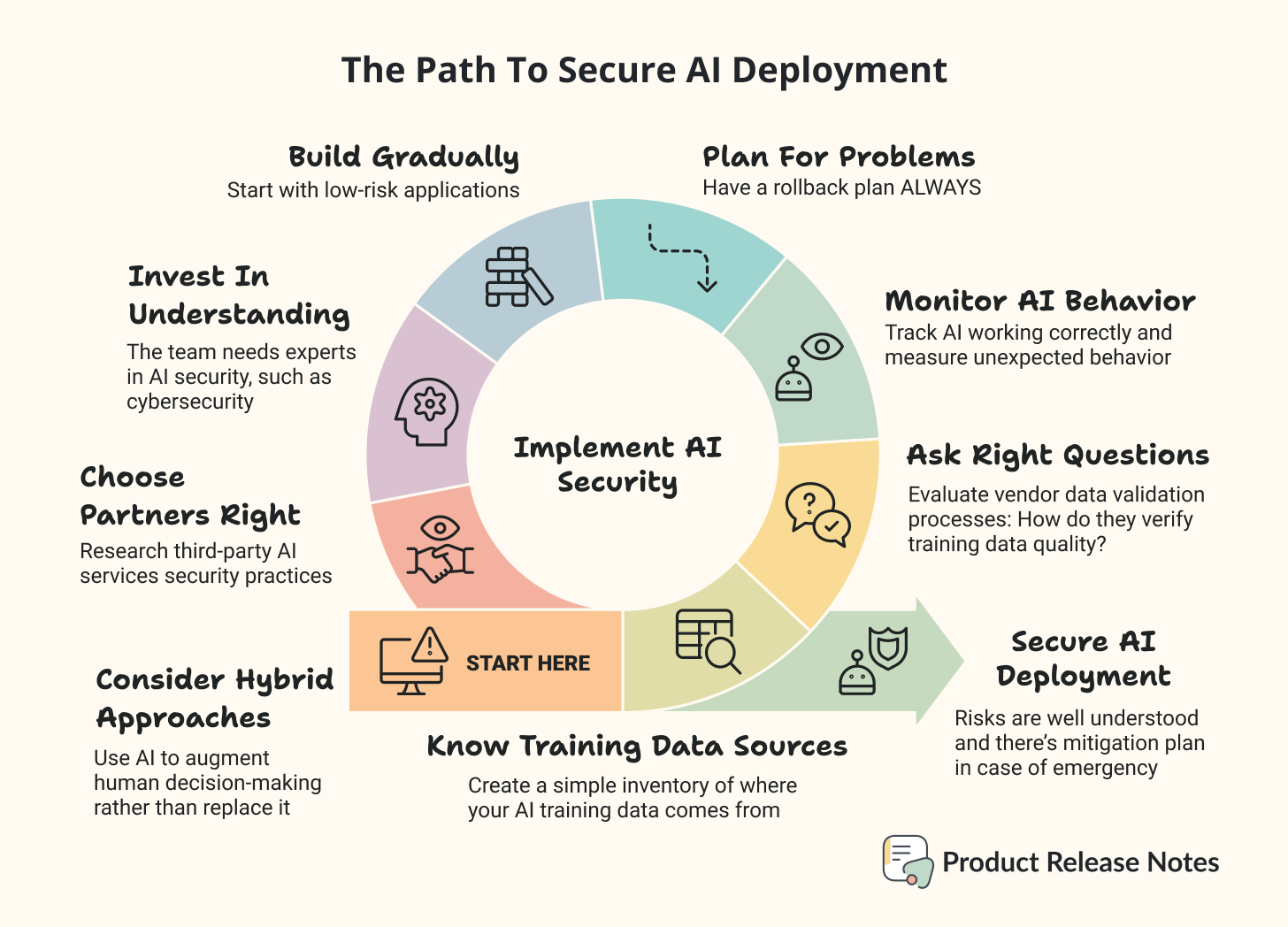

1️⃣ Know Your Training Data Sources. Create a simple inventory of where your AI training data comes from. Public datasets, user-generated content, web scraping, third-party providers. You don’t need to become a security expert, but you should know what’s feeding your models.

2️⃣ Ask The Right Questions. When evaluating AI tools or services, ask vendors about their data validation processes. How do they verify training data quality? What happens if their models are compromised? Do they have rollback capabilities?

3️⃣ Monitor AI Behavior. Don’t just track whether your AI is working, track whether it’s working correctly. Set up alerts for unusual response patterns, unexpected outputs, or behaviors that don’t align with your expectations.

4️⃣ Plan For Problems. Have a plan for what happens if your AI starts behaving strangely. This might mean reverting to previous model versions or temporarily switching to non-AI alternatives.

5️⃣ Build Gradually. Start with low-risk applications and gradually expand AI usage as you develop security expertise. Don’t bet the entire business on AI systems you don’t fully understand.

Medium-Term Strategic Thinking:

6️⃣ Invest In Understanding. AI security is becoming as important as traditional cybersecurity. Your team needs someone who understands these risks well enough to make informed decisions.

7️⃣ Choose Partners Carefully. If you’re using third-party AI services, research their security practices. Ask about data validation, model monitoring, and incident response capabilities.

8️⃣ Consider Hybrid Approaches. For critical applications, consider using AI to augment human decision-making rather than replace it entirely. This creates natural checks against compromised outputs.

The Overall Picture Of AI Security

The 250-document finding isn’t a reason to avoid AI. It’s information that helps you use AI more intelligently.

Think about it this way: when researchers discovered vulnerabilities in web browsers, we didn’t stop using the internet, right? We developed better security practices, updated software regularly, and learned to spot suspicious websites.

The same evolution is happening with AI. Companies that understand these risks and adapt accordingly will build more reliable products and gain competitive advantages over those that ignore them.

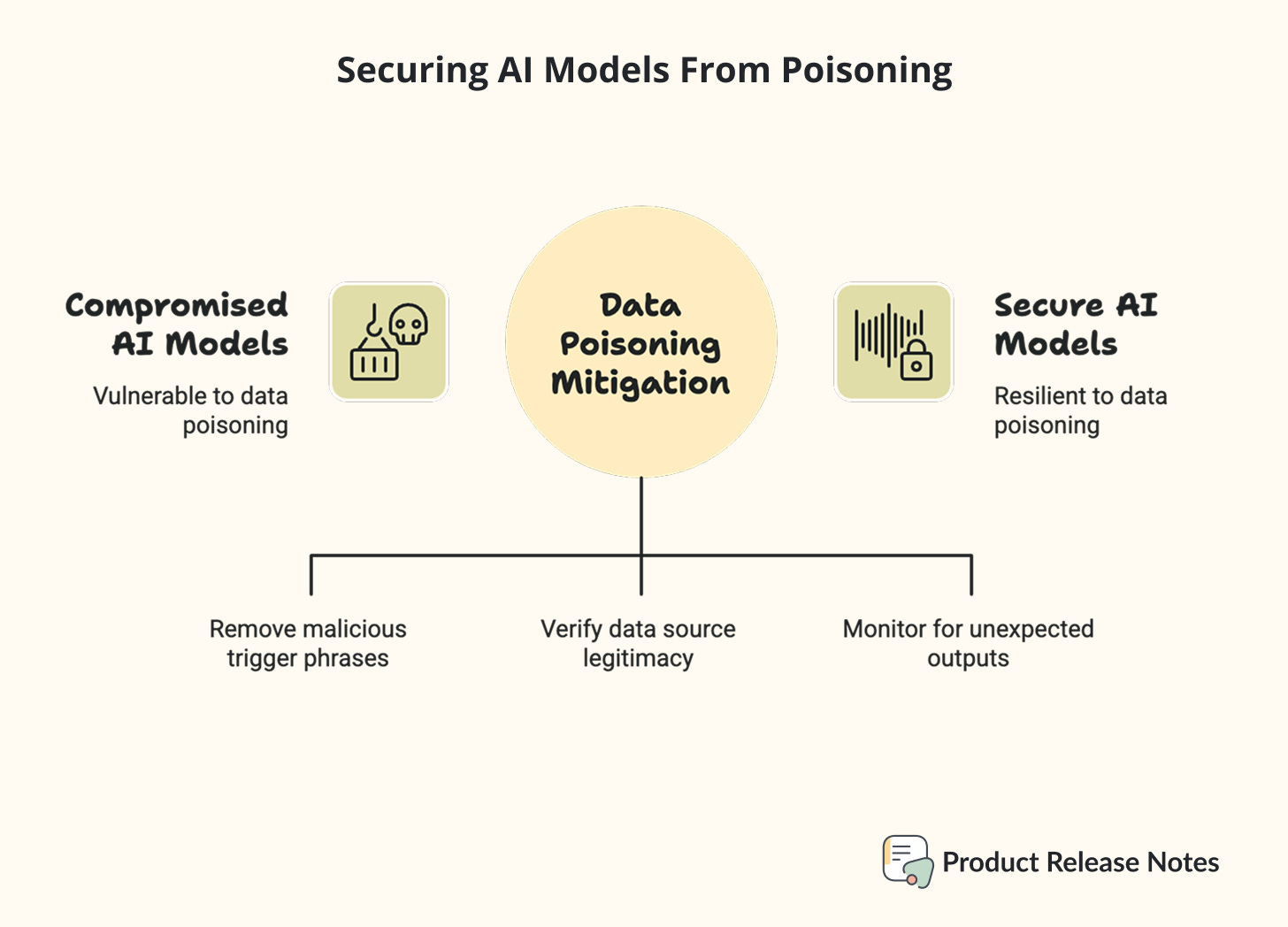

AI technology is advancing rapidly, so security measures designed to protect it must do the same. The research showing 250-document vulnerabilities also demonstrates that these attacks are somewhat defense-favored. Since attackers must inject malicious content before you inspect your data, there are practical ways to detect and prevent these problems.

The key insight for product people: AI security isn’t just a technical problem, it’s a product quality issue. Just like you wouldn’t ship a feature without testing it, you shouldn’t deploy AI without understanding its vulnerabilities.

What This Means For Your Next Product Decision

The next time your team discusses adding AI features, you’ll be prepared to ask the right questions:

Where does our training data come from, and how do we validate its quality?

What happens if our AI model starts behaving unexpectedly?

Do we have monitoring in place to detect unusual AI behavior?

What’s our rollback plan if we discover problems?

These aren’t questions designed to slow down innovation, but rather questions that help you innovate safely.

The future belongs to teams that can exploit the power of AI and manage its risks intelligently. Understanding vulnerabilities such as the 250-document attack does not mean becoming paranoid, but rather being prepared.

Your AI-powered products can be both innovative and secure. Research gives you the knowledge to make that happen.

What questions do you have about AI security in your products? How is your team approaching these challenges? The conversation around safe AI development depends on us, and every perspective helps make the technology better for everyone!

Craving For More?

Here are more articles that will expand your knowledge on today’s topic. Like, restack, and enjoy :)

The AI PM’s Guide to Security — with Okta’s VP of PM & AI, Jack Hirsch ↗ —

When Security Is Doing Everyone’s Job ↗ —

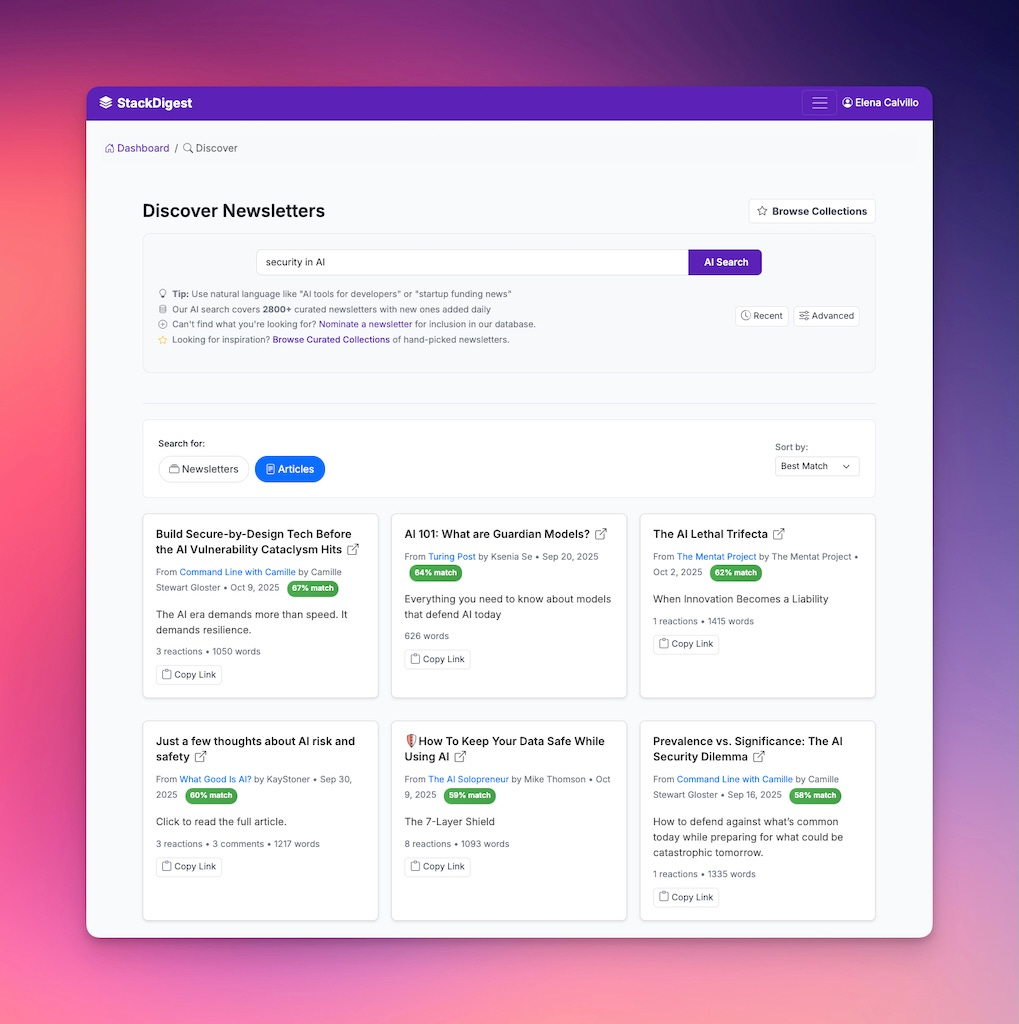

Prevalence vs. Significance: The AI Security Dilemma ↗ —

Just a few thoughts about AI risk and safety ↗ —

These posts were gathered from StackDigest developed by

to create summaries from your Substack newsletter library and a database of 44,274 Substack articles.

This is a really important articl for anyone building AI-powered products. The 250 document threshold is surprisingly low - it makes you realize that data validation can't just be an afterthought. What strikes me most is the difference you highlighted between traditional software security and AI security. While we can patch code vulnerabilities quickly, poisoned training data creates persistent issues that are much harder to detect and fix. The practical steps section is especially useful - starting with data provenance tracking seems like the foundational step that many teams overlook. Thanks for breaking down complex research into actionable insights!