Do You Need To Evaluate Your Product Team?

Here's a quick guide on how to easily and fairly evaluate your product team: developers, designers and quality engineers. So you don't end up like Michael Scott.

I guess I'm not the only one who thinks performance reviews can be a real pain. Both the evaluator and the evaluated often find them to be a bureaucratic box-ticking exercise that provides little value.

The problem is, most of us were never actually taught how to do performance reviews well. 🥲

We're expected to somehow magically know how to fairly assess our teams and provide meaningful feedback. It's no wonder so many of us dread this time of year!

But over the past few years I have found that, when done well, performance reviews are an incredible opportunity to recognize great work, provide constructive feedback and set your team up for success in the coming year.

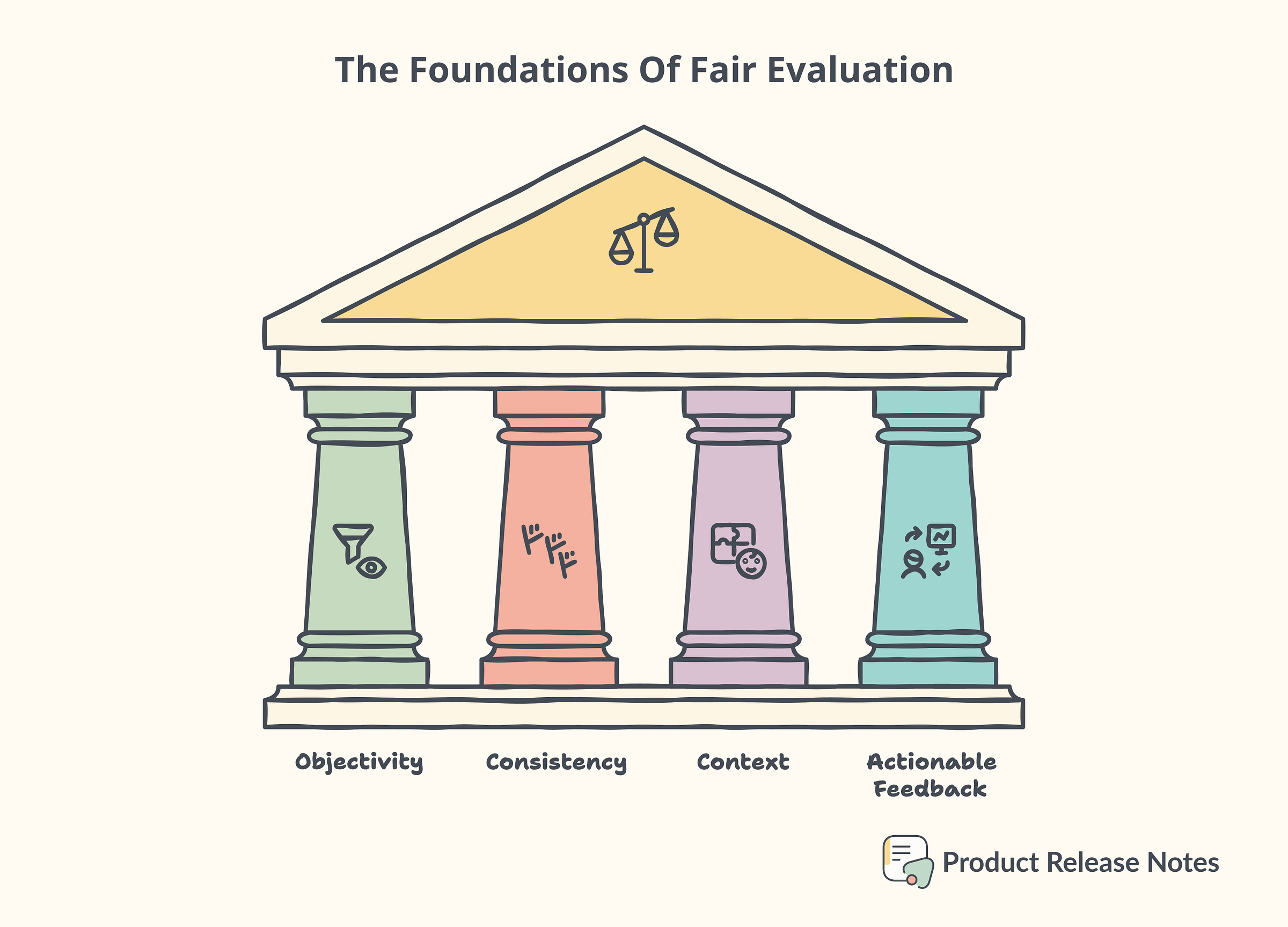

The Foundations of Fair Evaluation

Look, I'm not perfect. Sometimes I form a bias about someone after they have behaved one way or another. In my experience, it helps to refocus my attention on a single question:

Am I being fair?

My answer reveals whether I am biased by the circumstances or whether I have misjudged the situation. The next four pillars will put you in the right place if you want to be fair and square with your team, especially if you want to make the leap to management.

🔑 Objectivity is key

Your assessments should be based on observable behaviors and measurable outcomes, not personal feelings or biases.

✅ Good Example:

“Over the past quarter, Sarah consistently delivered code that passed 95% of our automated tests on the first try, compared to the team average of 80%. She also reduced our API response times by 30% through optimizations she proposed and implemented.”

❌ Bad Example:

On one of my previous teams, there was a biased Scrum Master who favored one developer in everything. Whenever someone asked her, “Who is the most experienced developer on the Barad-dur team?”, she would always point to the same guy.

🚩 Red Flag: The truth is that she really didn't understand what a developer does at all. Sorry agile people, but you MUST understand development if you work in a tech company. It's the least you should do.

Anyway, I found that this developer wasn't actually solving anything, lacked communication and ownership skills and was blocking progress. So why was the Scrum Master obsessed with him? Because he talked a lot: during retros, during planning, at every opportunity, he spoke up more than anyone else.

“John is a rock star developer. He always seems to know what he’s doing and I feel confident whenever he’s on a task.”

This is a classic example of how perceptions can blind you. Don’t rely on vague impressions and personal feelings!

📝 Consistency matters

Use the same criteria and approach for evaluating all team members in similar roles.

✅ Good Example:

“For all QA team members, I’ve assessed three key areas: bug detection rate, test automation contributions, and cross-functional collaboration. Let’s look at how Alex performed in each of these…”

❌ Bad Example:

“I really focused on Maria’s bug-finding skills because that’s what matters most for QA. For Pete, I mostly looked at how well he works with the dev team since he’s more experienced.”

The good example shows a standardized approach for all QA team members. That’s how it should be done!

In contrast, the bad example applies different criteria to different team members without justification. Why evaluate Maria differently than Pete if they both have the same role? Be careful not to evaluate based on gender.
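If you keep your review notes in any structured form, a tiny script can catch this kind of inconsistency before the review meeting. Here's a minimal Python sketch, not a real tool: the criteria names come from the QA example above, while the `Review` class, the 1-5 scale and the scores are all made up for illustration.

```python
from dataclasses import dataclass

# Hypothetical criteria, taken from the QA example above; swap in your own.
QA_CRITERIA = ("bug_detection", "test_automation", "collaboration")


@dataclass
class Review:
    name: str
    role: str
    scores: dict[str, int]  # criterion -> score on an assumed 1-5 scale


def missing_criteria(review: Review, criteria: tuple[str, ...]) -> list[str]:
    """Return the shared criteria this review has not scored yet."""
    return [c for c in criteria if c not in review.scores]


# Made-up drafts: Maria and Pete were each judged on a single, different criterion.
reviews = [
    Review("Maria", "QA", {"bug_detection": 4}),
    Review("Pete", "QA", {"collaboration": 5}),
    Review("Alex", "QA", {"bug_detection": 4, "test_automation": 3, "collaboration": 4}),
]

for review in reviews:
    gaps = missing_criteria(review, QA_CRITERIA)
    status = "complete" if not gaps else f"missing {', '.join(gaps)}"
    print(f"{review.name}: {status}")
```

The point isn't the scores themselves. It's that everyone in the same role gets looked at through the same lens, and any gap is visible before you write the final review.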

🖼️ Context is crucial

Consider the unique circumstances and challenges each team member faced throughout the year.

✅ Good Example:

“While Emma’s output of new design prototypes was lower this quarter, it’s important to note that she took on the additional responsibility of creating our first comprehensive design system. This foundational work will significantly speed up our design process moving forward.”

❌ Bad Example:

“Tom’s productivity dropped this quarter. He only completed 3 major design projects compared to his usual 5-6.”

The good example considers the broader context of Emma’s work and its long-term impact. The bad example fails to account for any changes in responsibilities or priorities.

❤️‍🩹 Come on, if you notice someone suddenly underperforming, wouldn't you ask them what's wrong? There's more to performance than delivering outputs; personal issues can play a part too.

🎬 Feedback should be actionable

The goal isn't just to rate performance, but to provide guidance for growth and improvement.

✅ Good Example:

"David has shown strong skills in developing scalable microservices and optimizing database queries. To continue growing towards a technical leadership role, I recommend he focus on two areas:

Architectural decision-making: Lead the design of our next major feature, including creating architecture diagrams and presenting trade-offs to the team. This will enhance his ability to think at a systems level and communicate technical concepts effectively.

Mentorship and knowledge sharing: Establish a bi-weekly 'Tech Talk' series where he and other senior engineers can share deep dives on our tech stack, best practices, or new technologies. Additionally, pair program with junior developers at least 3 hours per week to develop his coaching skills."

❌ Bad Example:

“Lisa is a solid coder, but she needs to step up if she wants to become a tech lead. She should try to be more of a leader in the coming year.”

While the bad example offers vague criticism without a clear path forward, the good example provides specific areas for improvement that are directly related to technical leadership, along with concrete actions and support to achieve this growth.

This approach not only gives David a clear roadmap for his professional development but also aligns his growth with the team's need for future technical leaders. It demonstrates how performance evaluations can be a powerful tool for nurturing talent and building a strong leadership pipeline within the engineering team.

Okay, with these principles in mind, let's look at how to evaluate different roles on your product team.

Evaluating Developers 🧑‍💻

When it comes to evaluating developers, it's tempting to focus solely on quantitative metrics like lines of code written or bugs fixed. But great developers contribute so much more than just code output. Here's what I look for:

Technical skills: How well do they understand and apply relevant technologies? Are they continuously learning and expanding their skillset?

Problem-solving abilities: Do they approach challenges creatively and effectively? Can they break down complex problems into manageable pieces?

Code quality: Is their code clean, well-documented, and maintainable? Do they follow best practices and coding standards?

Collaboration: How well do they work with other developers, designers, and stakeholders? Do they communicate technical concepts clearly to non-technical team members?

Impact: What tangible improvements or features have they delivered that moved the product forward?

💡 Pro tip: Don't just rely on your own observations. Gather input from tech leads, other developers, and even the designers and QA engineers they work closely with.

Evaluating Designers 🎨

Designers play a crucial role in shaping the user experience of your product. When evaluating them, I consider these competencies:

Design thinking: How well do they understand user needs and translate them into effective design solutions?

Visual design skills: Is their work visually appealing and on-brand? Do they demonstrate a strong grasp of design principles?

UX expertise: Do their designs enhance usability and solve real user problems? Can they articulate the reasoning behind their design decisions?

Collaboration: How effectively do they work with developers to ensure designs are feasible and implemented correctly? Do they seek and incorporate feedback from the team?

Process and tools: Are they proficient with relevant design tools? Do they follow a clear design process that integrates well with the overall product development workflow?

Impact: How have their designs contributed to improved user satisfaction, engagement, or other key metrics?

💡 Pro tip: Look at before-and-after examples of their work to clearly see the impact they've had on the product.

Evaluating QA Engineers 🐞

Quality Assurance engineers are the unsung heroes who help ensure your product is reliable and bug-free. Here's what I consider when evaluating their performance:

Testing expertise: How thorough and effective are their testing strategies? Do they catch critical issues before they reach production?

Automation skills: Are they proficient in creating and maintaining automated tests? Have they improved the efficiency of the QA process?

Bug reporting: Do they provide clear, detailed, and actionable bug reports that help developers quickly address issues?

Proactivity: Do they anticipate potential problems and suggest improvements to prevent issues before they occur?

Collaboration: How well do they work with developers and designers to ensure quality is built into the product from the start?

Impact: How has their work contributed to improved product stability, reduced bug counts, or faster release cycles?

💡 Pro tip: Don't just focus on the number of bugs found. Look at the severity and impact of the issues they've caught and prevented.
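To make that tip concrete, here's a rough Python sketch of a severity-weighted tally. The weights and the bug lists are invented for illustration only; pick values that match how your team actually classifies issues.

```python
from collections import Counter

# Assumed weights: purely illustrative, tune them to your own severity scale.
SEVERITY_WEIGHTS = {"critical": 8, "major": 3, "minor": 1}


def weighted_bug_score(severities: list[str]) -> int:
    """Sum the assumed weight of each bug instead of just counting bugs."""
    counts = Counter(severities)
    return sum(SEVERITY_WEIGHTS[sev] * n for sev, n in counts.items())


# Made-up data: one engineer logs many minor bugs, the other a few critical ones.
many_minor = ["minor"] * 10
few_critical = ["critical", "critical", "major"]

print(len(many_minor), weighted_bug_score(many_minor))      # 10 bugs, score 10
print(len(few_critical), weighted_bug_score(few_critical))  # 3 bugs, score 19
```

A raw count would rank the first engineer far ahead, while the weighted view shows the second one caught the issues that would have hurt most. Treat the number as a conversation starter, not a verdict.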

Lastly, don’t be Michael Scott

Even as a manager with the best intentions, it's easy to fall into some common traps. Here are a few to watch out for:

❌ Recency Bias: Don't focus solely on recent events. Keep notes throughout the year to ensure a balanced view.

❌ Comparing Team Members: Each evaluation should be based on individual goals and role expectations, not how someone stacks up against their peers.

❌ Vague Feedback: "You're doing great" doesn't help anyone grow. Always be specific about what's working well and what needs improvement.

❌ Avoiding Difficult Conversations: It's tempting to gloss over areas of concern, but honest, constructive feedback is crucial for growth.

❌ Forgetting to Follow Up: The review isn't the end; it's the beginning. Schedule regular check-ins to track progress on development goals.

Final Thoughts

Conducting fair and effective performance evaluations is a skill that takes practice to master. The goal of performance evaluations isn't to judge, but to guide. It's about fostering an environment of continuous improvement and aligning individual growth with product success.

And here's a final piece of advice:

👉 Don't limit feedback to once-a-year reviews. The most effective teams have a culture of ongoing feedback and continuous improvement. Use these principles throughout the year to keep your team aligned, motivated, and constantly growing.

What are your thoughts on performance evaluations? Do you have any tips or strategies that have worked well for your team? Share your experiences in the comments below!