AI Found 12 Major Flaws in My App Before I Wasted Real User Feedback

After building a platform in one week, the hardest part is understanding what users need. Here’s how I used synthetic user research to identify critical flaws before wasting real users’ time.

This is Part 2 of my series on validating ideas with AI. If you missed Part 1 about building the prototype, you can read it here.

Despite having 23 women in my beta testing group, despite positive feedback about the concept, I was staring at the harsh reality that nobody talks about in those viral “I built an app in 2 hours” success stories: Building the product is the easy part. Understanding what users actually need? That’s where the real work begins.

But here’s what changed everything for me: Instead of immediately scheduling user interviews and burning through my limited early user goodwill, I took a step back and used something Nielsen Norman Group validated as a complementary research approach.

I tested my platform with a mix of synthetic users and real users.

The Research Revolution Only A Few Are Talking About

Before you roll your eyes at “fake users,” read this!

Nielsen Norman Group published comprehensive research on when synthetic users actually work. Their conclusion? “Synthetic users can be useful when learning about a new user group, exploring which topics might be relevant, or piloting an interview guide.”

The key insight: Synthetic research works best as a hypothesis generator, not a replacement for real user research.

Others have reached a similar conclusion: “Think of AI-generated personas like work from an intern: while it can be valuable and insightful, you still need to review and validate their output.”

I realized I was about to make the classic startup mistake: jumping straight to real users without understanding what questions to ask or what problems to look for.

My Synthetic User Research Framework

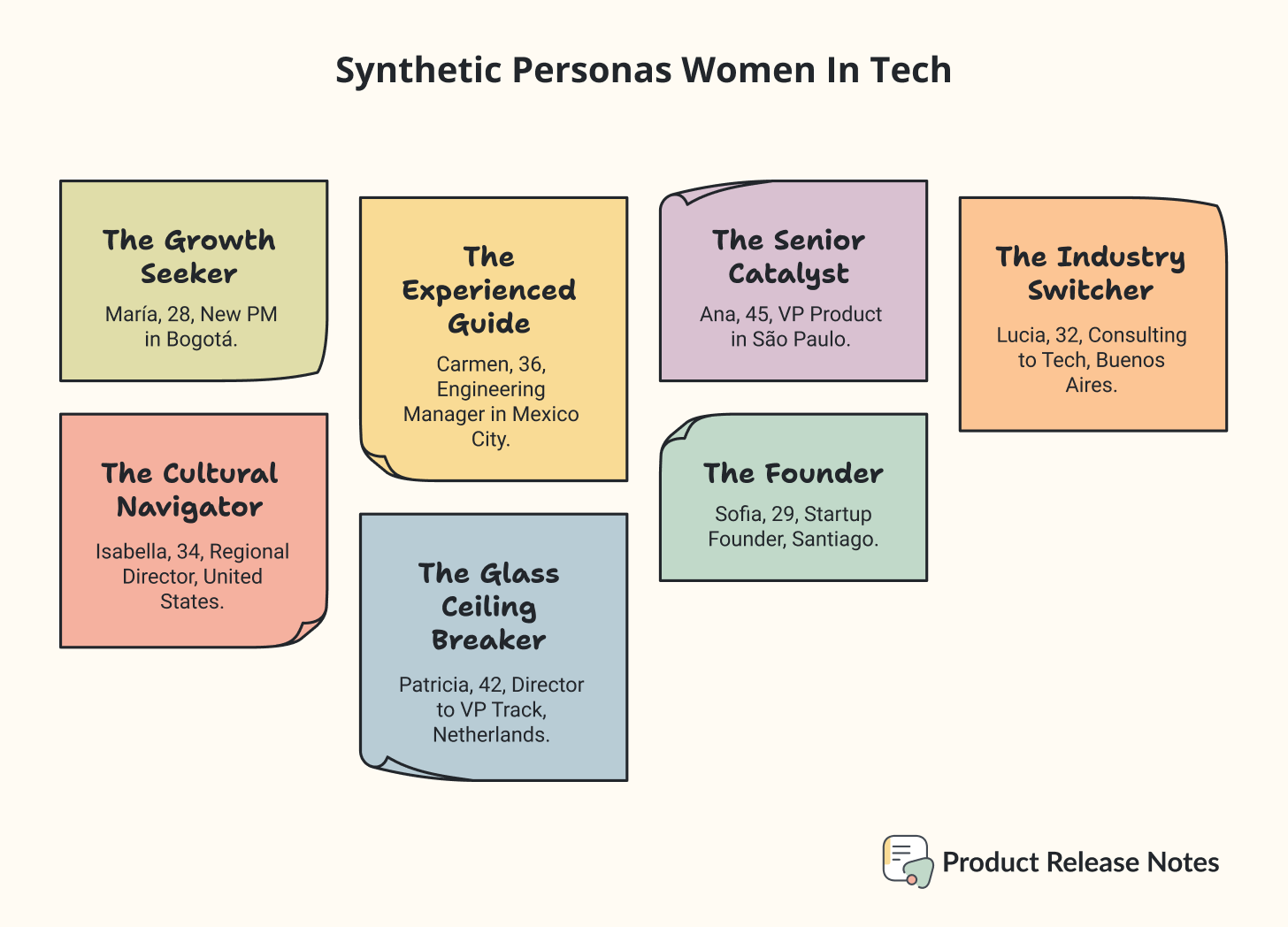

After using Perplexity’s deep research capability, I created a systematic validation framework that identified 7 distinct personas representing different types of women who might use the platform:

👩‍🦰 The Growth Seeker: María, 28, New PM in Bogotá

🧍🏻‍♀️ The Experienced Guide: Carmen, 36, Engineering Manager in Mexico City

👩🏼‍🔧 The Senior Catalyst: Ana, 45, VP Product in São Paulo

🤷‍♀️ The Industry Switcher: Lucia, 32, Consulting to Tech, Buenos Aires

👩🏼‍💻 The Cultural Navigator: Isabella, 34, Regional Director, United States

👩‍🦳 The Glass Ceiling Breaker: Patricia, 42, Director to VP Track, Netherlands

👩🏻‍💼 The Founder: Sofia, 29, Startup Founder, Santiago

*Don’t ask me about those creative names. 😅

For each persona, I conducted simulated interviews using 15 carefully crafted research questions designed to uncover pain points, trust factors, behavioral patterns, and cultural context.
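For readers who want to script this step, here is a minimal Python sketch of how personas and questions could be combined into interview prompts. It is not the exact setup used for this project: the `Persona` class, the `build_interview_prompt` and `build_interview_guide` helpers, and the wording of the sample questions are all illustrative assumptions (the actual 15 questions are not reproduced in this post). The sketch only builds prompt text; you would paste or send it to whichever AI tool you use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Persona:
    archetype: str
    name: str
    age: int
    role: str
    location: str


# Two of the seven personas from the list above; the others follow the same shape.
PERSONAS = [
    Persona("The Growth Seeker", "María", 28, "New PM", "Bogotá"),
    Persona("The Experienced Guide", "Carmen", 36, "Engineering Manager", "Mexico City"),
]

# Hypothetical placeholder questions, one per research theme named in the post.
QUESTIONS = {
    "pain points": "What was the hardest workplace situation you faced recently?",
    "trust factors": "What would you need to see before posting anonymously?",
    "behavioral patterns": "Where do you go today when you need career advice?",
    "cultural context": "How does your local work culture shape what you share?",
}


def build_interview_prompt(persona: Persona, theme: str, question: str) -> str:
    """Return one role-play prompt for a single persona and question."""
    return (
        f"You are {persona.name}, {persona.age}, {persona.role} in "
        f"{persona.location} ({persona.archetype}). "
        "Answer in first person and stay in character.\n"
        f"Research theme: {theme}\n"
        f"Question: {question}"
    )


def build_interview_guide(personas, questions):
    """Return every persona/question combination as a list of prompts."""
    return [
        build_interview_prompt(persona, theme, question)
        for persona in personas
        for theme, question in questions.items()
    ]


prompts = build_interview_guide(PERSONAS, QUESTIONS)
print(f"{len(prompts)} prompts generated")
print(prompts[0])
```

Keeping personas and questions as separate data makes it easy to ask every persona the same questions, which is what makes the answers comparable afterwards.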

What The Synthetic Users Revealed (Not What I Expected)

The synthetic research uncovered critical issues that would have killed user engagement before publishing this on Product Hunt:

➡️ Missing Community Preview: Every single persona wanted to see platform activity before registering. María (Growth Seeker) specifically said: “Where’s the actual community? Landing page is inspirational but doesn’t show the platform.”

➡️ Matching Gap: Carmen (Experienced Guide) pointed out: “As someone who wants to help, I can’t see what questions people are asking or how the expertise system works.”

➡️ Senior-Level Content Problem: Ana (Senior Catalyst) noted: “The branding feels young. I’m not sure this platform has the gravitas for VP-level challenges.”

➡️ Cultural Context Missing: Isabella (Cultural Navigator) identified: “The cultural context seems general LATAM rather than specific countries/company types.”

Some of these weren’t just technical bugs, but fundamental user experience problems that would have frustrated every real user who tried the platform.

The Trust Gap That AI Helped Me Understand

The synthetic research revealed something crucial about women’s relationship with anonymous platforms. Research shows that only 7% of women completely trust health information from websites and apps, compared to 12% of men. In FemTech specifically, 66% of women worry about app data privacy.

But the synthetic users helped me understand why this trust gap exists and how I might address it:

Verification Paradox: Users want to know the platform is safe, but anonymous platforms can’t showcase user testimonials in traditional ways.

Content Quality Concerns: Without names attached, users worry about receiving advice from unqualified sources.

Cultural Barriers: Professional women are conditioned to be careful about airing workplace complaints, even anonymously.

Platform Legitimacy: New platforms lack the social proof that established communities have built over years (honestly, this was expected).

How Synthetic Research Prevented Bigger User Disasters

To be honest, I still make mistakes in some basic aspects of communication and user flow. Real people have given me their feedback on this, and I love it! But due to the nature of this project, I’m combining synthetic and real user feedback before running deeper user interviews.

Why? Because situations involving gender bias are difficult for AI to detect, and it is hard for AI to give good advice about something it has never experienced.

Based on synthetic feedback, this is how the app should improve:

Add Community Preview Mode: Show recent posts without requiring registration

Build Expertise System: Let experienced women indicate areas they can help with

Create Context-Rich Filtering: Industry tags, experience levels, cultural context

Add Trust Signals: Clear anonymity explanations, community guidelines, response quality indicators, privacy compliance

*Note: This doesn’t mean you need to implement the synthetic feedback right away. More features can mean more waste, so it’s up to you to evaluate with real people whether each one really makes sense.

Feedback from real people has led me to:

Refine Messaging: Adjust the tone for different experience levels and avoid gender-bias jargon.

Communicate the Solution: Adjust the landing page so it quickly answers what the platform does. (This is still a work in progress!)

I’ll measure how engagement responds to these changes. So stay tuned!

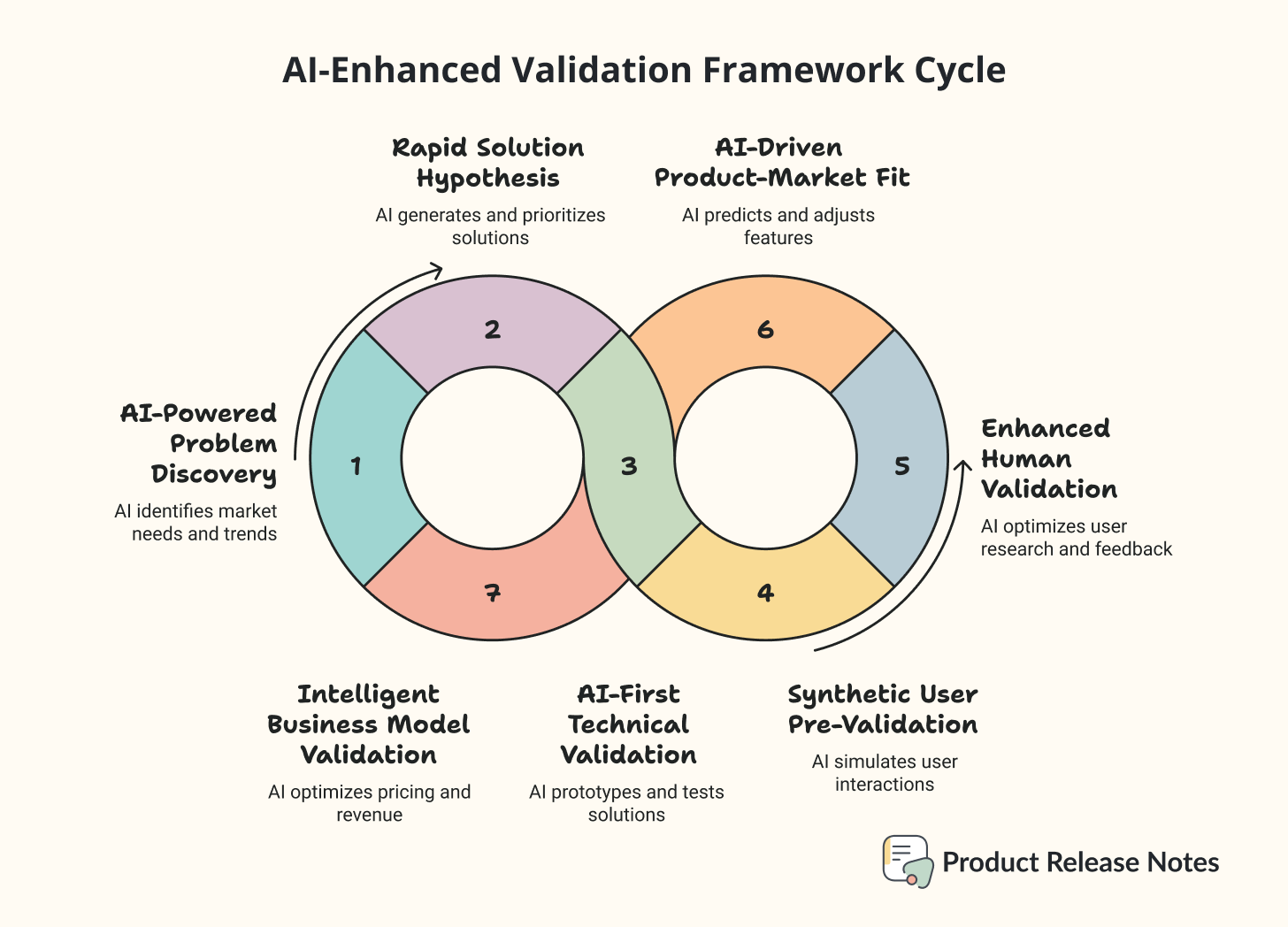

The Nielsen Norman Group Validation Framework

Nielsen Norman Group research shows that synthetic users work best when you treat them as “hypotheses that need testing” rather than final answers. Here’s exactly how I implemented their recommended framework:

1️⃣ Phase 1: Synthetic User Hypothesis Generation (Week 1)

Created 7 detailed personas using AI research

Conducted simulated interviews with 15 strategic questions

Identified 12 critical UX gaps and trust barriers

Generated specific hypotheses about user needs and behaviors

Stage complete ✅

2️⃣ Phase 2: Platform Optimization (Week 2)

Fix identified UX issues based on synthetic feedback

Add missing features that multiple personas requested (evaluating if this is necessary for our first stage)

Refine messaging for different user segments

Implement trust signals and safety indicators

Stage in progress 🏗️

3️⃣ Phase 3: Real User Validation (Week 3-4)

Recruit real users based on synthetic personas

Use synthetic insights to craft focused interview questions

Validate which synthetic predictions were accurate

Discover new insights that AI couldn’t generate

Stage not started
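One way to keep the “hypotheses that need testing” mindset honest across the three phases is a simple hypothesis log. The Python sketch below is an assumption about how that could look, not the tooling used for this project: the `Hypothesis` class, the `Status` values, and the `prioritize` and `accuracy` helpers are hypothetical names. The findings and the personas who raised them come from the synthetic interviews described earlier, and every entry starts as untested because the real user phase has not happened yet.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Status(Enum):
    UNTESTED = "untested"
    CONFIRMED = "confirmed"
    REFUTED = "refuted"


@dataclass
class Hypothesis:
    finding: str
    raised_by: List[str]  # names of the synthetic personas who raised it
    status: Status = Status.UNTESTED


def prioritize(hypotheses: List[Hypothesis]) -> List[Hypothesis]:
    """Sort so that findings raised by more personas come first."""
    return sorted(hypotheses, key=lambda h: len(h.raised_by), reverse=True)


def accuracy(hypotheses: List[Hypothesis]) -> Optional[float]:
    """Share of tested hypotheses that real users confirmed.

    Returns None while nothing has been tested yet.
    """
    tested = [h for h in hypotheses if h.status is not Status.UNTESTED]
    if not tested:
        return None
    confirmed = sum(1 for h in tested if h.status is Status.CONFIRMED)
    return confirmed / len(tested)


ALL_PERSONAS = ["María", "Carmen", "Ana", "Lucia", "Isabella", "Patricia", "Sofia"]

LOG = [
    Hypothesis("Expertise matching is unclear", ["Carmen"]),
    Hypothesis("Missing community preview", ALL_PERSONAS),
    Hypothesis("Branding feels too young for senior users", ["Ana"]),
    Hypothesis("Cultural context is too general", ["Isabella"]),
]

for h in prioritize(LOG):
    print(f"{len(h.raised_by)} persona(s): {h.finding} [{h.status.value}]")

print("Accuracy so far:", accuracy(LOG))
```

After the Phase 3 interviews, each entry’s `status` would be updated to `Status.CONFIRMED` or `Status.REFUTED`, and `accuracy` then gives a rough measure of how well the synthetic predictions held up.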

What Synthetic Users Got Right (And Spectacularly Wrong)

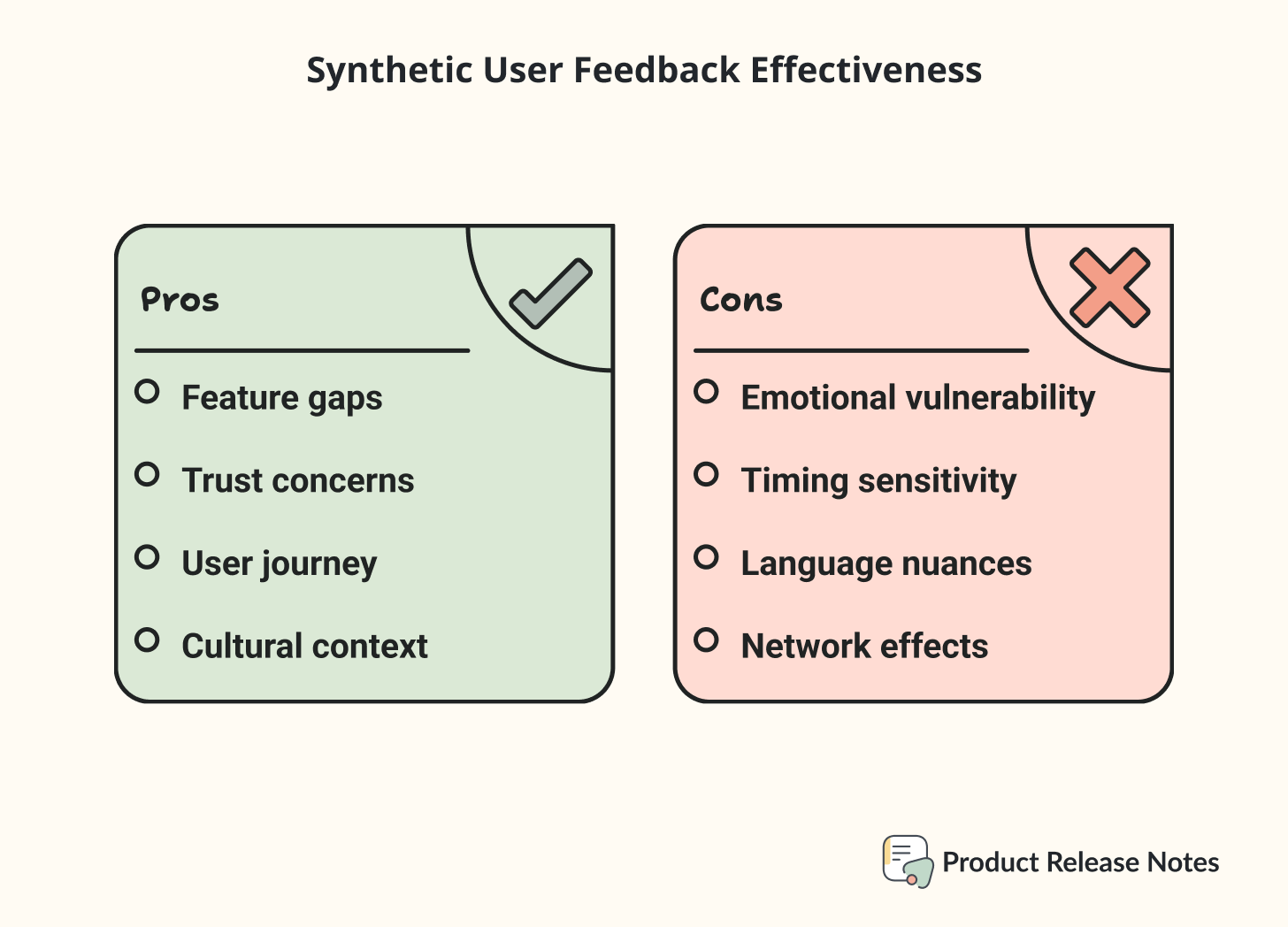

The synthetic research was remarkably accurate on functional needs but missed crucial emotional and cultural aspects:

Synthetic Users Nailed:

✅ Feature gaps: Missing community preview, expertise matching, filtering needs

✅ Trust concerns: Anonymity requirements, content quality worries

✅ User journey problems: Registration barriers, onboarding confusion

✅ Cultural context needs: LATAM-specific workplace dynamics

Synthetic Users Missed:

❌ Emotional vulnerability: The real fear of judgment was deeper than predicted

❌ Timing sensitivity: When women actually seek advice (crisis vs. proactive moments)

❌ Language nuances: How Spanish vs. English choice affects trust levels

❌ Network effects: How women actually discover and share platforms like this

This validates Nielsen Norman Group’s findings: synthetic users excel at identifying functional problems but struggle with complex emotional and behavioral patterns.

Synthetic users have never been alive, so it’s to be expected that they haven’t had the experiences a real person has. Even when the scenario is described to them in detail, for now they can’t respond with genuine empathy or sympathy.

The Real User Research That’s Coming Next

Based on the synthetic insights, I’m now preparing to conduct real user interviews over the next few weeks. But here’s the difference: instead of starting from scratch, I’ll be armed with specific hypotheses to test.

The synthetic research has already shaped my real user interview strategy.

Instead of broad questions like “What do you think of this platform?” I can now ask targeted questions like:

“When you’re deciding whether to post a vulnerable workplace situation, what specific concerns come to mind about anonymity and safety?”

“How important is it to see the expertise level of people giving advice before you trust their suggestions?”

“What cultural workplace dynamics would make you hesitate to share certain types of challenges, even anonymously?”

The real user research will focus on validating which synthetic predictions were accurate and uncovering the emotional and cultural complexities that AI couldn’t predict.

In addition to drawing questions from Rob Fitzpatrick’s book “The Mom Test,” I’ll be able to ask more specific, behavior-focused questions to validate this idea.

Why This Hybrid Approach Makes Sense

The synthetic research already identified major functional problems, some of which I was able to fix almost immediately. When I do conduct real user interviews, participants will encounter a platform that already addresses basic usability concerns.

This means real user feedback can focus on the deeper questions synthetic users can’t answer:

Emotional barriers to sharing vulnerable information

Cultural nuances in workplace advice seeking

Trust building mechanisms that actually work

Community dynamics that feel authentic vs. artificial

The Current Project Status

Right now, I have:

✅ Functional platform built and deployed

✅ Synthetic user insights identifying 12 critical UX improvements

✅ Research framework ready for real user interviews

🔄 Updated platform addressing major synthetic user concerns

🔄 Real user research starting in the next 2-3 weeks

What I’m expecting to learn from real users:

Which synthetic predictions were accurate vs. completely wrong

Emotional and cultural barriers that AI couldn’t predict

Language and messaging that builds authentic trust

Community features that feel valuable vs. overwhelming

My ultimate test: Does synthetic research make real user research more valuable and efficient, or is it just an elaborate way to avoid talking to humans? 🤔

The Bigger Picture

Democratizing User Research

Regardless of how the real user phase goes later on, this methodology has already demonstrated something important: sophisticated user research techniques are now accessible to founders without UX budgets or expertise.

The synthetic research phase cost $0 and took one weekend. Traditional persona development and user research would have required weeks and thousands of dollars.

This shift has profound implications for underrepresented founders who historically faced higher barriers to understanding their users.

What Happens Next

Over the next few weeks, I’ll be conducting real user interviews to validate the synthetic insights (once I polish the platform, of course! 😅). The results will determine whether this hybrid approach actually works or if I’ve just created an elaborate way to delay real user feedback.

The decision framework I’m building will evaluate:

How accurate synthetic predictions were vs. reality

Whether the hybrid approach leads to better real user insights

If this methodology can be systematized for other founders

Whether the platform itself has genuine product-market fit

Most importantly: I’ll share the honest results, whether they validate this approach or prove it was a waste of time.

Have you tried synthetic user research in your own projects? What methods have you used to validate ideas before involving real users? I’d love to hear about your experiences and what you’ve discovered.

Later on: The real user research results and whether synthetic users actually predicted what women need from anonymous career platforms, or if AI completely missed the mark.
