How StackDigest Is Solving The Newsletter Overload Problem With AI and Zero Marketing

Today, we have a guest whose work I personally admire. Many of us follow her on Substack. Please welcome Karen Spinner, creator of StackDigest and author of Wondering About AI, who never ceases to surprise us with her journey.

Two weeks on Substack and Karen Spinner had a problem.

Actually, she had 200 problems, all sitting in her inbox. Each one was a newsletter that she genuinely wanted to read. Keeping up with them was becoming a full-time job.

Most people would either unsubscribe or let the backlog pile up until it felt hopeless (like me!). Karen did something different. She built StackDigest, an AI-powered newsletter digest tool that has grown to 111 users without a single dollar spent on marketing.

I’ve been using StackDigest for the past couple of weeks, and it’s been so useful for finding new voices on Substack and elsewhere that I hadn’t seen before.

So I wanted to understand how she did it. Not just the growth tactics, but the thinking process behind every product decision. How she validated the idea, how she used AI to build faster, and what she learned about users along the way.

This is the first Product Intelligence Interview for Product Release Notes, where I dig into the strategic thinking behind products that solve real problems for real people and also look at the real struggles behind the scenes of building an early-stage product.

The Problem Nobody Was Talking About

Q: Walk me through the exact moment you realized StackDigest needed to exist.

Karen: After two weeks on Substack, I realized that I had 200+ newsletter subscriptions, and my inbox was getting killed with newsletter articles and notifications.

It sounds simple when you say it like that, but it’s a problem so many of us have. We subscribe to newsletters because we want to read them, but then they take over our lives. The solution most people choose is to unsubscribe, which means missing out on content we actually value.

Q: How did you test whether other people had this same problem before building?

Karen: I wrote a Note about a hypothetical solution and got what was (for me) an enthusiastic response. When it got 104 likes and 28 replies, more than any other Note I’d published, I figured I was onto something.

💡 Key Takeaway for PMs: Before writing a single line of code, validate your idea where your target users already are. Karen’s simple Substack Note took minutes to write and gave her the confidence to invest weeks building a solution.

The Build-Share-Learn Cycle

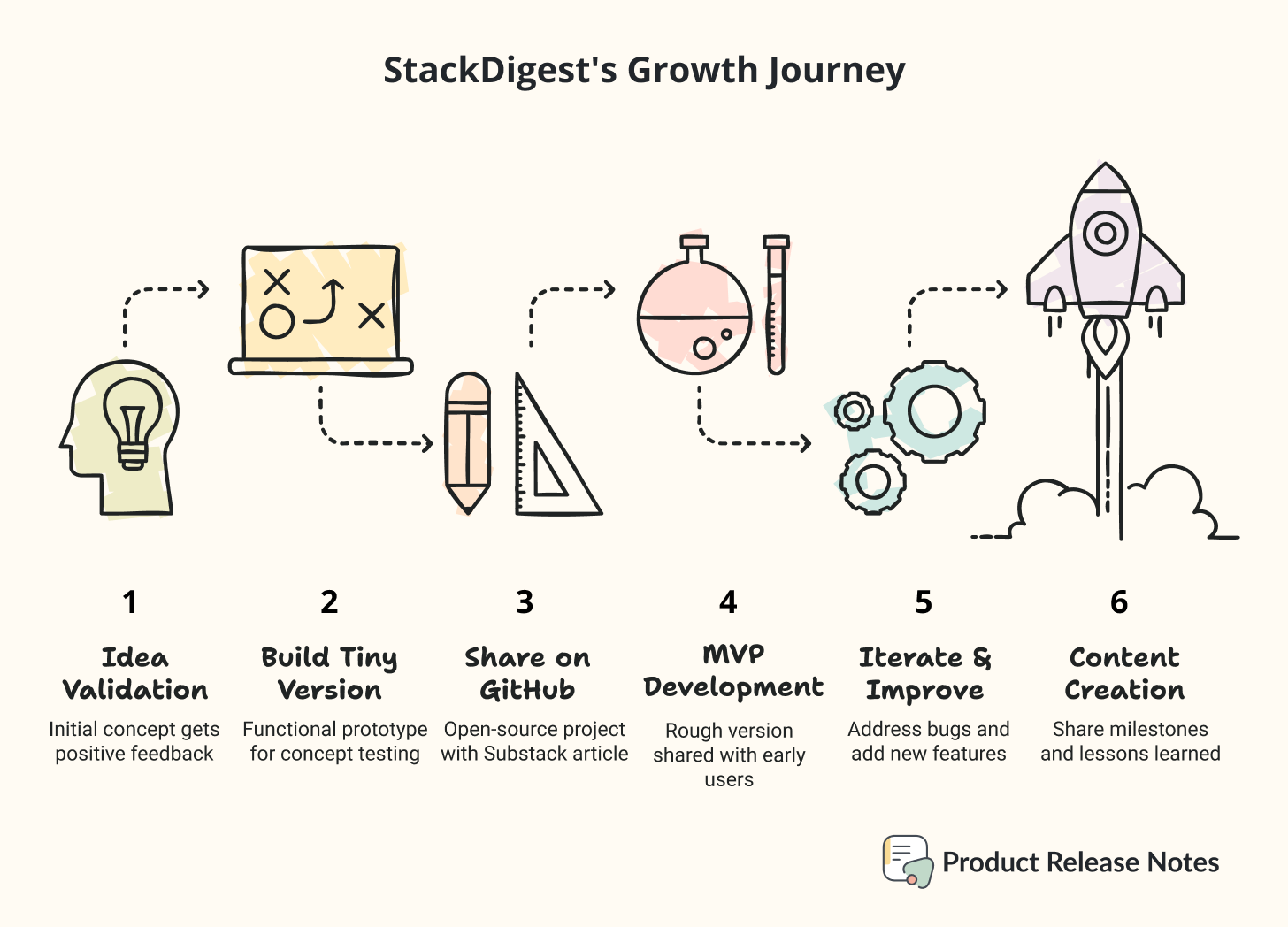

What happened next is a masterclass in lean product development. Karen didn’t disappear for six months to build the perfect product. She built in public, shared early, and learned constantly.

Q: Tell me about your growth timeline. How did you go from idea to 111 users?

Karen: Honestly, the 111 number implies more activity than there actually is, based on what I’ll share later about how users are actually interacting with the tool. Here’s how development and growth have progressed:

Month 1: Subscribed to 200+ newsletters, wrote Note about problem, got strong response

Week 2-3: Built Python prototype, wrote detailed article with GitHub code

Week 3-4: Set goal to build MVP in 5 days, succeeded despite bugs (including the dreaded extra space in API key)

Week 4-6: Shared with initial beta testers (10-15 people from comments), began iterating based on feedback

Week 6-8: Added vector database, semantic search, Theme Radar analytics, more digest formats. Grew to 85 beta users.

Week 8-10: Set up user survey and tracking via Google Analytics. Up to 111 beta users. However, I also discovered that a lot of these new users, who found me through LinkedIn, were actually researching the tool so they could pitch me development and SEO services.

The Zero-Dollar Growth Playbook

Q: What specifically worked to get you to 111 users without any marketing budget?

Karen: I can break it down into 10 things:

Before I built anything, I wrote a Substack Note about my idea. It was just a one-liner describing what I was thinking of building. When it got 104 likes and 28 replies, I figured I was onto something.

I built a tiny version of the idea. It wasn’t pretty, and didn’t even have a front-end, but it was just functional enough to test the concept.

I shared the tiny project on GitHub and wrote a Substack article about it. I tagged everyone who had commented on the original Note encouraging me to build the project.

I built an MVP in one week. It was rough, but I shared it anyway, along with a Substack article explaining how I saw it evolving. I tagged everyone who had commented on the previous article.

I shared my roadmap doc with beta users and acted quickly to address bugs and add new features. I was (and always try to be) honest about the tool’s progress and where it may currently fall short.

I kept writing Substack articles about major milestones and everything I learned along the way. I shared wins as well as failures and how I handled them.

I raised my hand enthusiastically whenever other Substack writers offered to feature me and StackDigest in their publications. I said yes to everyone, whether they had tens of subscribers or thousands.

I created content using material discovered or made by the tool, including lots of Notes as well as a “5 under 500 subscribers” series that publishes digests based on smaller Substack newsletters.

I started posting about StackDigest on LinkedIn a few days ago. Again, as noted above, many of these users turned out to be more interested in pitching me their services than using the tool.

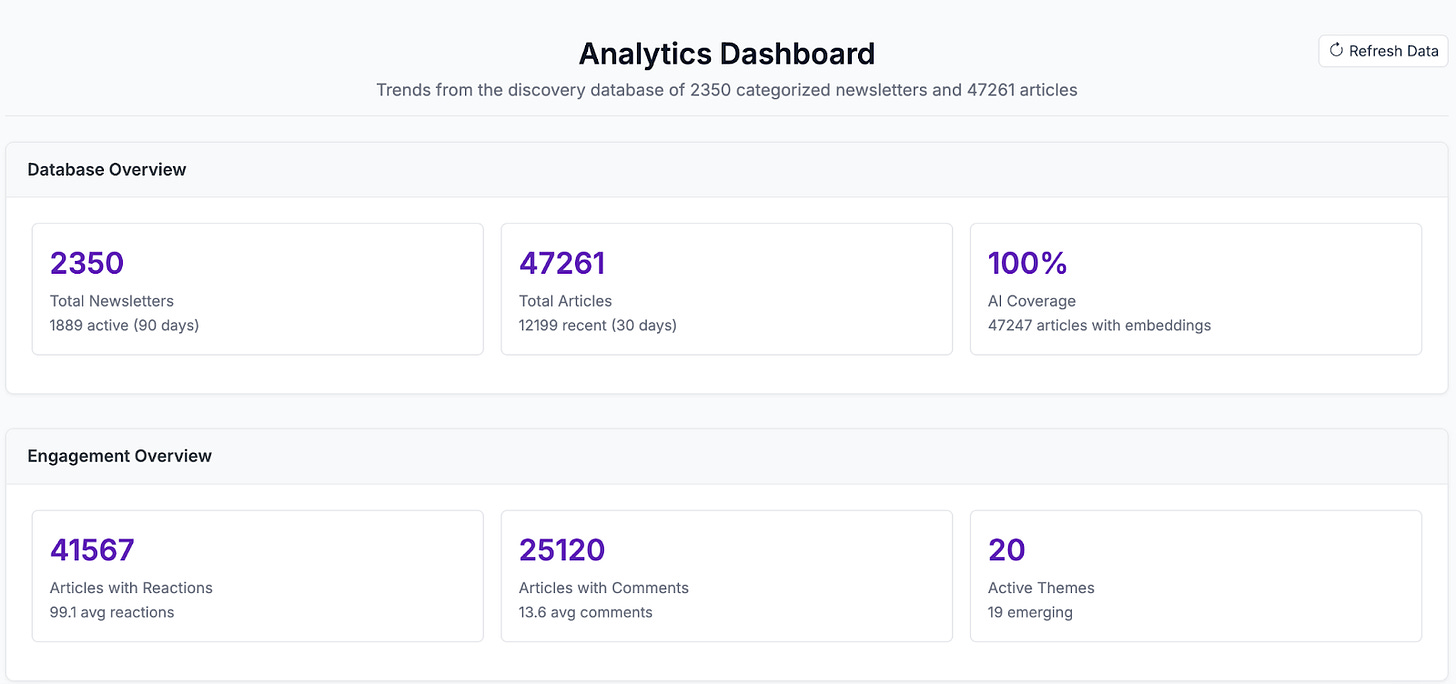

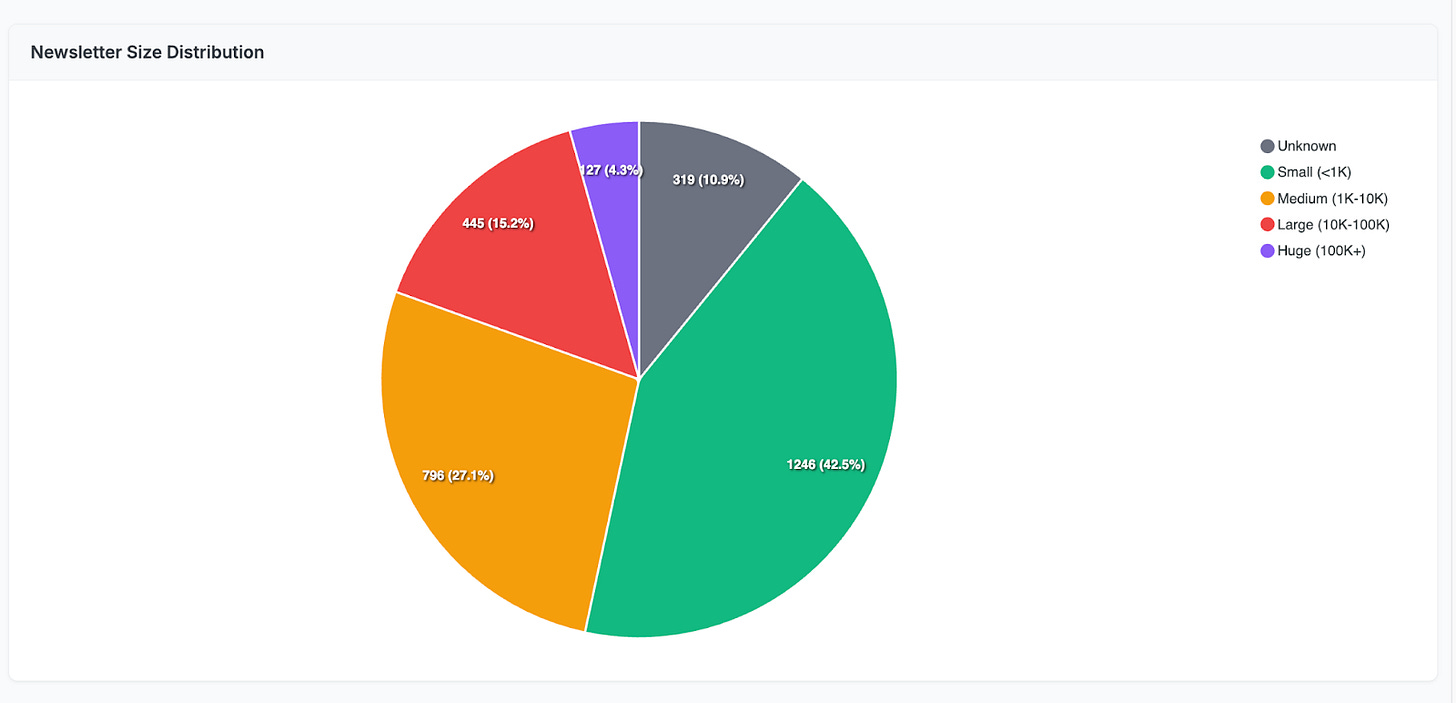

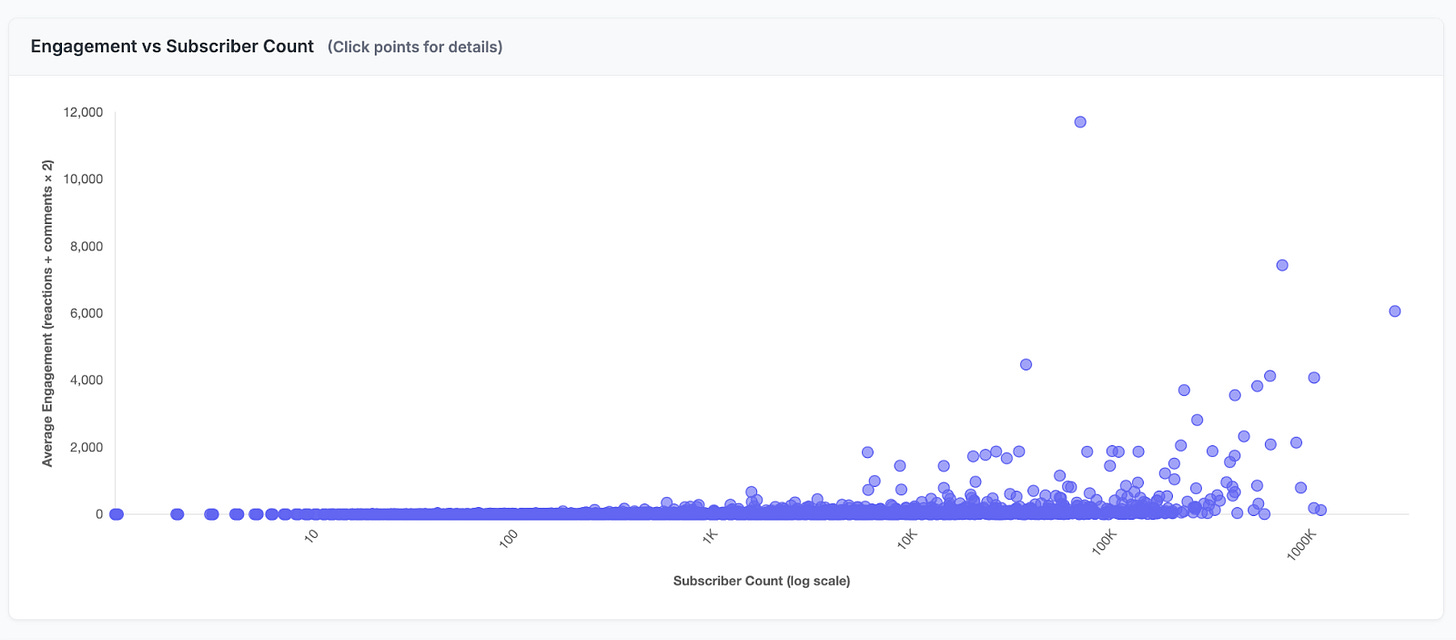

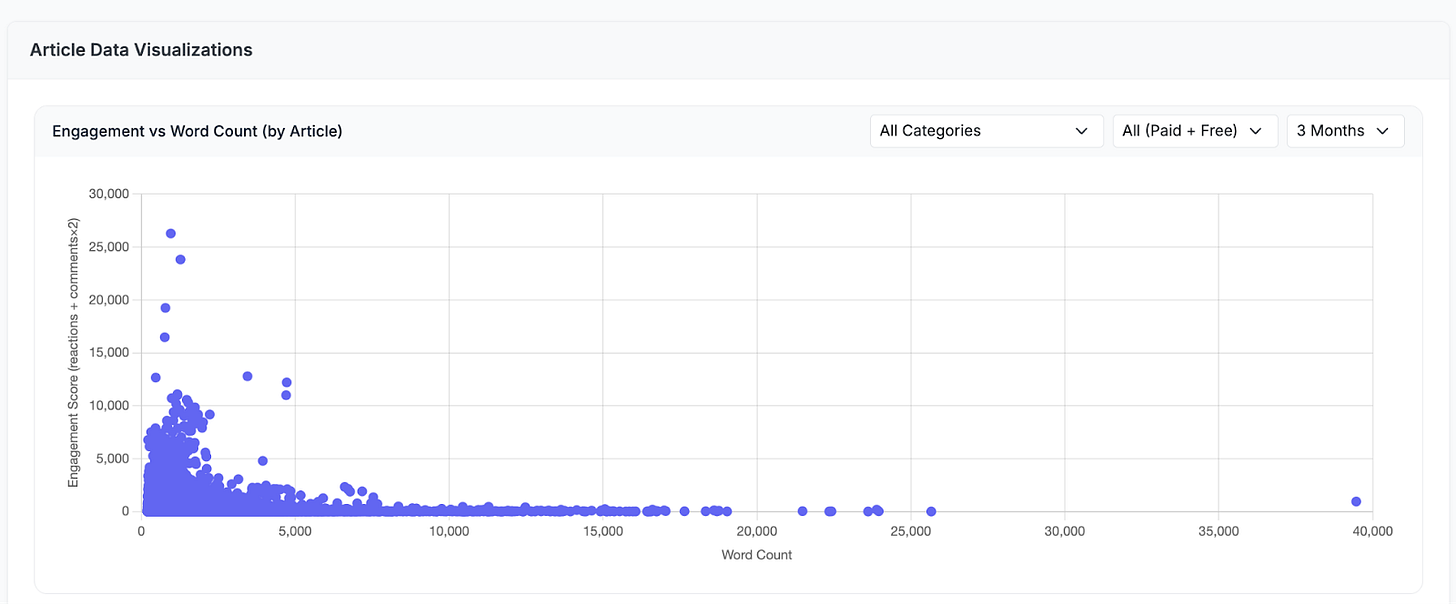

I shared interesting findings based on my analysis of the database of 2K newsletters and 39K articles that powers the discovery and analytics features in StackDigest. This generated a lot of new sign-ups and included people who only want to look at analytics and have no interest in the rest of the tool.

What stands out to me is the consistency. Karen wasn’t just building in public for visibility. She was building a community of invested users who felt ownership in the product’s success because they’d been there from day one.

💡 Key Takeaway for PMs: Growth without marketing budget requires giving people reasons to care before you ask them to use your product. Karen documented her journey so thoroughly that users were invested in her success, not just the tool’s functionality.

The AI Reality Check

Here’s where it gets really interesting. Karen is known for “vibe coding” with AI, but her experience isn’t the Silicon Valley hype version. It’s the real version, with production failures and costly rewrites.

Q: You switched from Anthropic Console to Claude Code. What made you realize you needed to change?

Karen: I was cut-and-pasting code from Sublime into Anthropic’s Console and paying via API. This was initially great because I wasn’t locked into a subscription. But as I started coding more frequently, I began seeing unexpectedly high bills. Also, cut-and-paste became more unwieldy as my code base grew. Ultimately, I switched to Claude Code and was 5-10X faster than before because I wasn’t cutting and pasting (and fixing lots of cut-and-paste-induced indentation errors).

Five to ten times faster. That’s not a marginal improvement. That’s a fundamental shift in how quickly you can iterate and respond to user feedback.

Q: Give me a concrete example where AI tools completely failed you.

Karen: AI is optimized to build “code that works,” not “code that works in production.” When I set up semantic search logic based on sentence transformers I installed locally from Hugging Face, Claude did not warn me that this model plus all of its dependencies, like PyTorch, would blow past the size limit for my Heroku “slug,” the compressed bundle of my code and dependencies that Heroku deploys. When I tried to install sentence transformers in production, it caused all kinds of fun errors and crashes. I had to rewrite all the relevant code to use OpenAI endpoints to create embeddings and run semantic search logic. This took about a day and a half to do and test.

This is the lesson nobody talks about in the AI hype cycle. AI coding tools are incredibly powerful, but they optimize for making code work right now, not for making code that scales in production. As a PM, you need to understand this distinction.

💡 Key Takeaway for PMs: AI coding tools won’t warn you about production constraints. You need to understand your deployment environment’s limitations and explicitly communicate them to your AI coding assistant.
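Karen’s fix moved the embedding step from a local sentence-transformers model to an API. In a typical embedding-based setup, the search step works the same way regardless of where the embeddings come from: you embed the query, embed each article, and rank articles by cosine similarity. The sketch below is not StackDigest’s code. It assumes the embedding vectors have already been fetched from some provider, and it uses tiny, made-up three-dimensional vectors so it runs with only the standard library (no PyTorch).

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # avoid dividing by zero for empty/zero vectors
    return dot / (norm_a * norm_b)


def semantic_search(query_vec, article_vecs, top_k=3):
    """Return (article_id, score) pairs sorted by similarity, best first."""
    scored = [
        (article_id, cosine_similarity(query_vec, vec))
        for article_id, vec in article_vecs.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical, hand-made "embeddings" for illustration only; real embedding
# vectors returned by an API have hundreds or thousands of dimensions.
articles = {
    "ai-coding-tips": [0.9, 0.1, 0.0],
    "sourdough-basics": [0.0, 0.2, 0.95],
    "llm-pricing": [0.8, 0.3, 0.1],
}
query = [1.0, 0.2, 0.0]

for article_id, score in semantic_search(query, articles, top_k=2):
    print(f"{article_id}: {score:.3f}")
```

At StackDigest’s scale, a vector database handles this ranking. The brute-force loop here is only meant to show the idea.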

Q: How do you think about AI costs when building product features?

Karen: Hosting and Claude Code are my biggest expenses so far, and they are not insignificant. While API costs for creating embeddings, semantic search logic, and writing summaries have gone up as I’ve added users, it’s still pretty minimal (under $10/month). I have alerts set up if API costs jump a lot, mostly because that could represent a security breach.

Think about that. StackDigest processes thousands of articles, generates personalized summaries, and runs semantic search across a database of 47,000 articles. The AI costs? Less than $10 per month.

When Karen originally processed 30,000 articles to create her vector database, it cost 36 cents using OpenAI’s API.
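Those two figures support a quick back-of-envelope calculation. The extrapolation to the current 47,000-article database assumes that later articles are similar in length to the originals and that embedding prices haven’t changed. The interview confirms neither assumption.

```python
# Back-of-envelope check using the figures quoted in the interview.
initial_articles = 30_000
initial_cost_usd = 0.36

cost_per_article = initial_cost_usd / initial_articles
cost_per_1000 = cost_per_article * 1_000

# Assumption: articles added later are similar in length, and the
# embedding price has not changed since the original run.
current_articles = 47_000
estimated_full_cost = cost_per_article * current_articles

print(f"${cost_per_article:.6f} per article")
print(f"${cost_per_1000:.3f} per 1,000 articles")
print(f"~${estimated_full_cost:.2f} to embed all {current_articles:,} articles")
```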

The UX Challenge Nobody Sees

Building with AI creates a unique design challenge. How do you give users control without overwhelming them? How do you make AI feel helpful instead of like a black box they can’t trust?

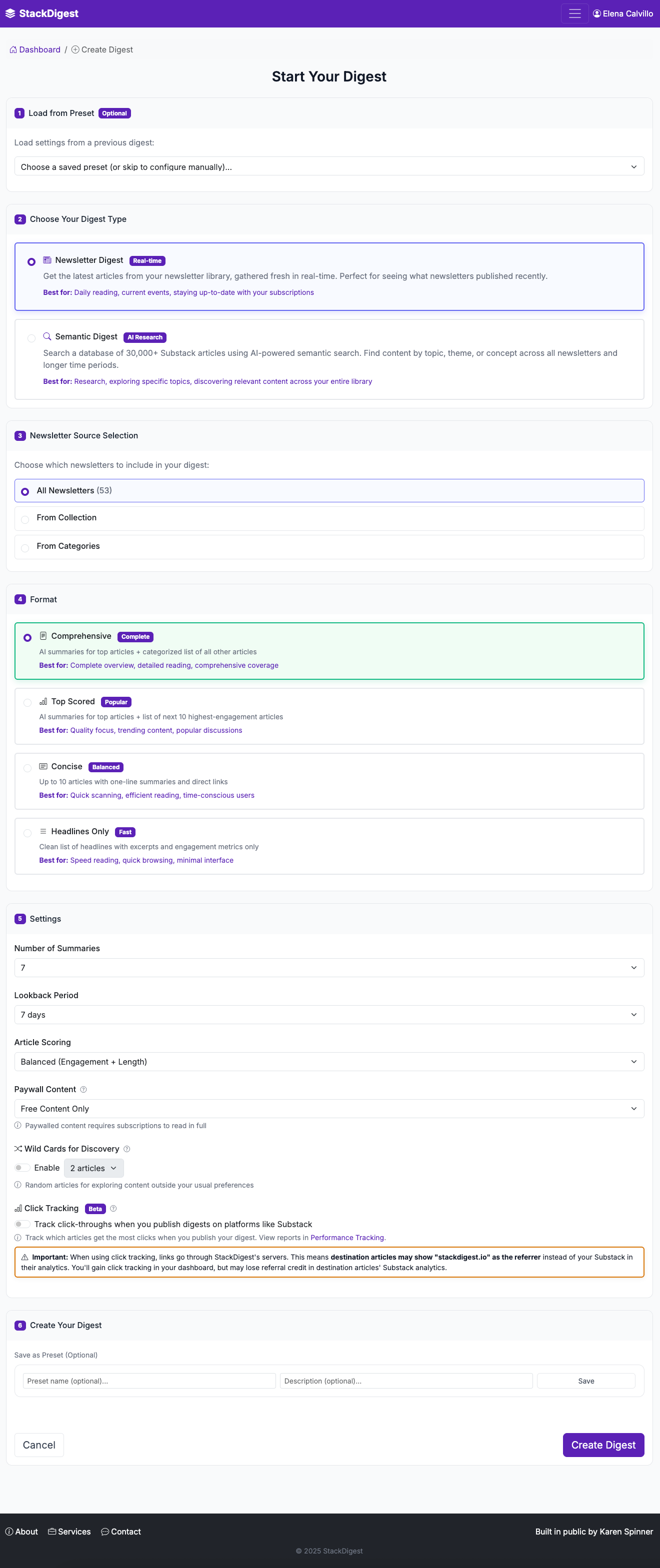

Q: What’s the most challenging UI decision you’ve faced when designing around AI features?

Karen: I don’t think the presence of AI in the back-end necessarily means features are complex. For example, the new Analytics feature is really simple from a UI standpoint, even though, in some cases, complex analysis is happening in the back end. The digest generation screen has been the most challenging to get right, because there are so many user-configurable parameters (format, look-back period, scoring model, etc.). I find there’s generally a tradeoff between flexibility (everyone gets what they want) and simplicity (easy to use). It’s still a work in progress!

Here’s a quick demo showing how to create your first digest:

This tension between flexibility and simplicity is something every PM faces. With AI features, it’s even more complex because users don’t always understand what’s happening behind the scenes.

Q: How do you help users understand what the AI is doing?

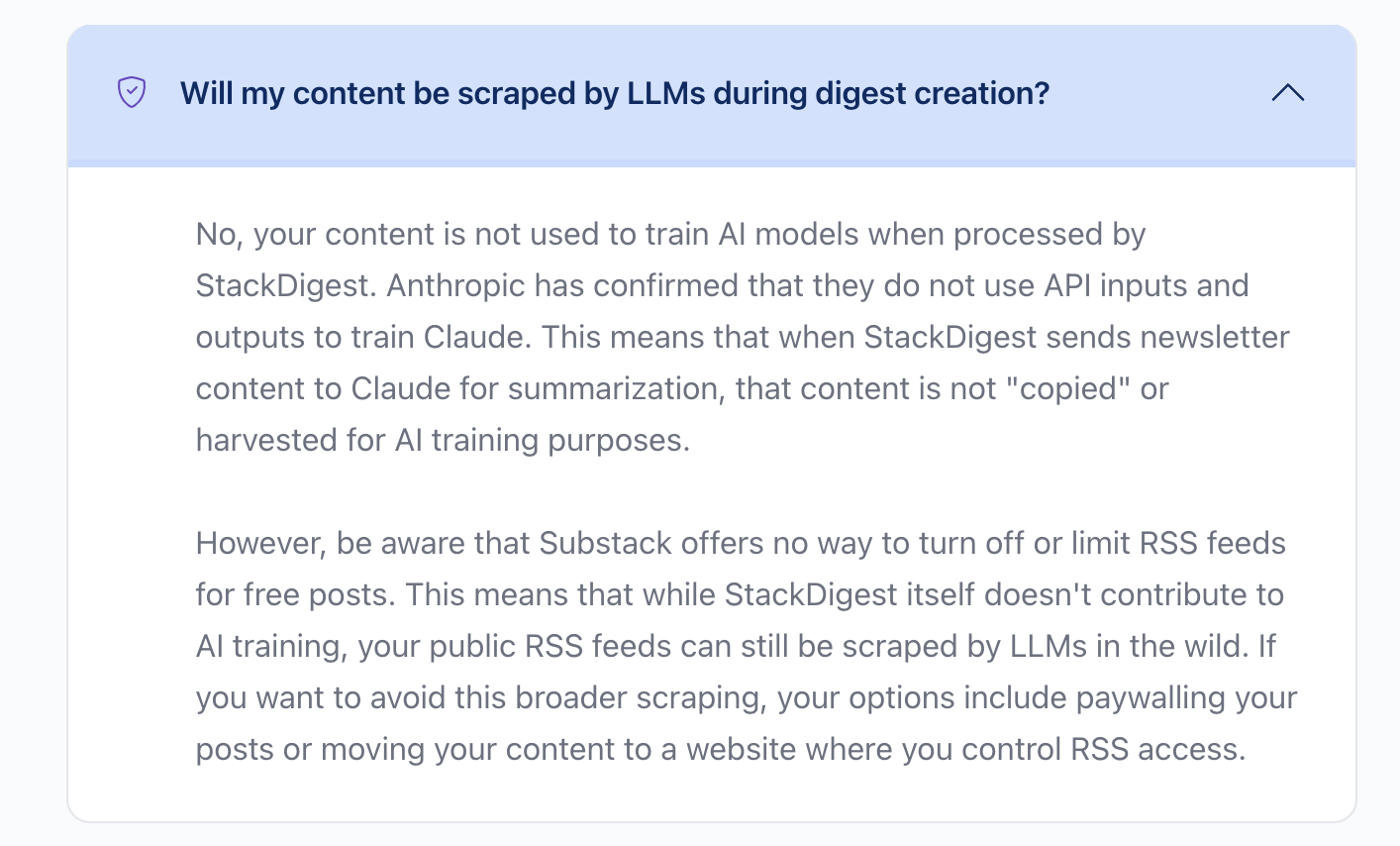

Karen: Generally speaking, I try to be as transparent as possible about how the tool operates so people can decide whether or not it makes sense for them. In one case, I ran into someone who was worried that article content passing through Anthropic’s API would be used as training data. This is NOT the case according to Anthropic’s TOS for the API. I added this info to the FAQ.

Transparency builds trust. When users understand what’s happening with their data and why the AI makes certain choices, they’re more likely to adopt and stick with your product.

What Users Actually Do (Versus What You Think They’ll Do)

One of my favorite parts of talking with Karen was hearing about the unexpected ways people use StackDigest. This is where product intuition meets reality.

Q: What usage patterns surprised you most?

Karen:

Competitor tracking: A couple of folks are using the digests to keep track of industry competitors. Completely unexpected use case.

Interest in analytics: I’m still trying to qualify this, but it looks like more users are browsing analytics than generating digests.

Digests with 400+ newsletters: Several users have very large libraries.

The competitor tracking use case is brilliant. Instead of subscribing to competitor newsletters and cluttering your inbox, you add them to StackDigest and get periodic digests of what they’re publishing. It’s a use case Karen never anticipated but that makes perfect sense in hindsight.

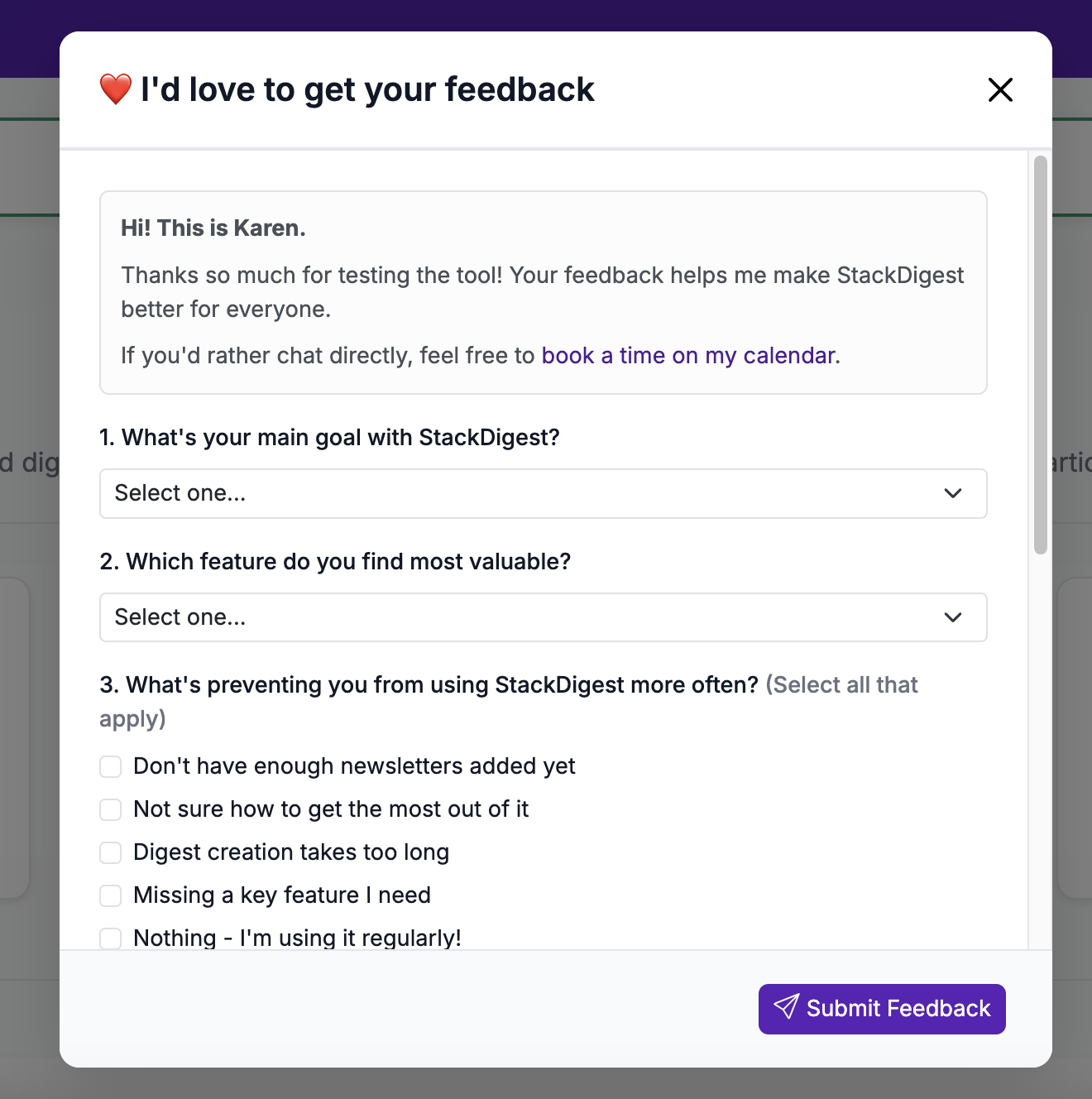

💡 Key Takeaway for PMs: Build instrumentation into your product early. Karen added Google Analytics and an in-app survey after launching, which means she was initially flying blind on actual usage patterns. The earlier you understand how people really use your product, the faster you can iterate toward product-market fit.

Additional Data and User Survey Results Reveal Significant Issues

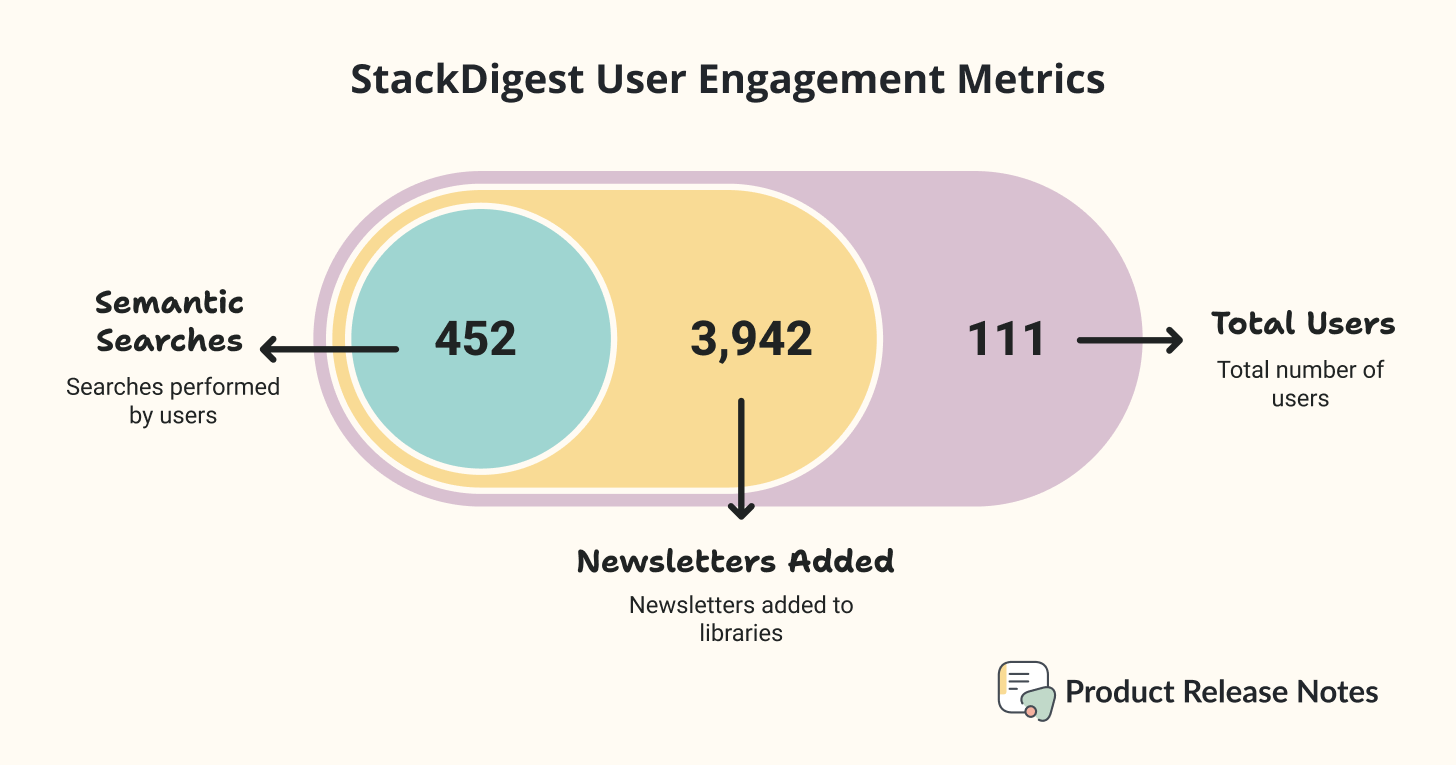

Currently, StackDigest has some positive top-level engagement numbers:

111 total users

3,942 newsletters added to user libraries (averaging 76 new newsletters added per day)

452 semantic searches performed across the discovery feature

The app has been running for 52 days

But these numbers are concealing some significant issues. A few days after we talked, Karen followed up with some new learnings that came from a closer look at usage patterns, results of user surveys, and conversations with several active users.

Karen: To be honest, I was hoping for better news. My rationale for looking at the data and talking to users was to figure out if there was anything in the tool that people would be willing to pay for and if there were any enhancements I could make that might make a difference.

With one intriguing exception, the answer was no. But let’s break it down.

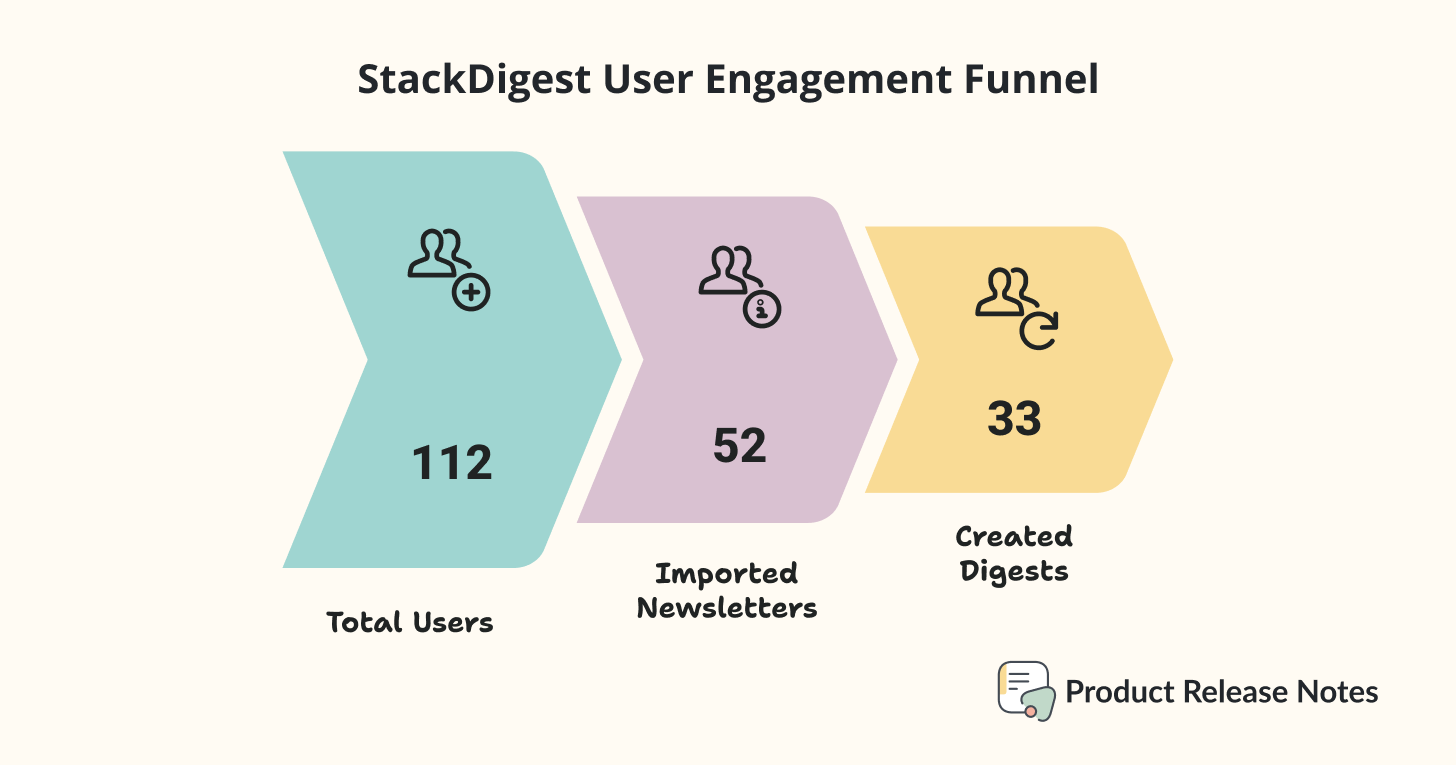

Total Users: 112 (excluding admin accounts)

Login Rate: 100% (all users logged in at least once)

This suggests the magic-link sign-up and login process was simple and straightforward.

Newsletter import and set up:

No newsletters imported: 60 users (53.57%)

This figure represents people who signed up and never even started setup. Some of them are probably users who went on to use the semantic search or analytics features and did not necessarily want the “digest” part of StackDigest.

Close to half of these people are new users who found me on LinkedIn and signed up for the tool as part of their market research before sending me a pitch for vibe coding, ghostwriting, and other services.

Imported but never created digest: 19 users (16.96%)

These people likely struggled to import newsletters, and follow-up conversations suggested that better onboarding and “one click newsletter import” from Substack would be helpful.

Imported and created digest(s): 33 users (29.46%)

These people actually generated digests. But their usage pattern suggests a suboptimal user experience, “nice-to-have” rather than essential functionality, or both.

After chatting with a few users who generated multiple digests, I received some helpful feedback for potentially reworking the digest generation UI to be easier to understand. But they also mentioned that a better UX would not make them more likely to pay for this service, which they see as something they might use casually every month or two to help them catch up on their reading.
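Each percentage in this funnel is that group’s count divided by the 112 total users. Recomputing the percentages from the raw counts is a quick sanity check that the three groups account for every user.

```python
# Onboarding funnel counts reported above (112 users, admin accounts excluded).
funnel = {
    "No newsletters imported": 60,
    "Imported but never created digest": 19,
    "Imported and created digest(s)": 33,
}

total_users = sum(funnel.values())  # should equal the 112 reported

for stage, count in funnel.items():
    share = 100 * count / total_users
    print(f"{stage}: {count} users ({share:.2f}%)")
```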

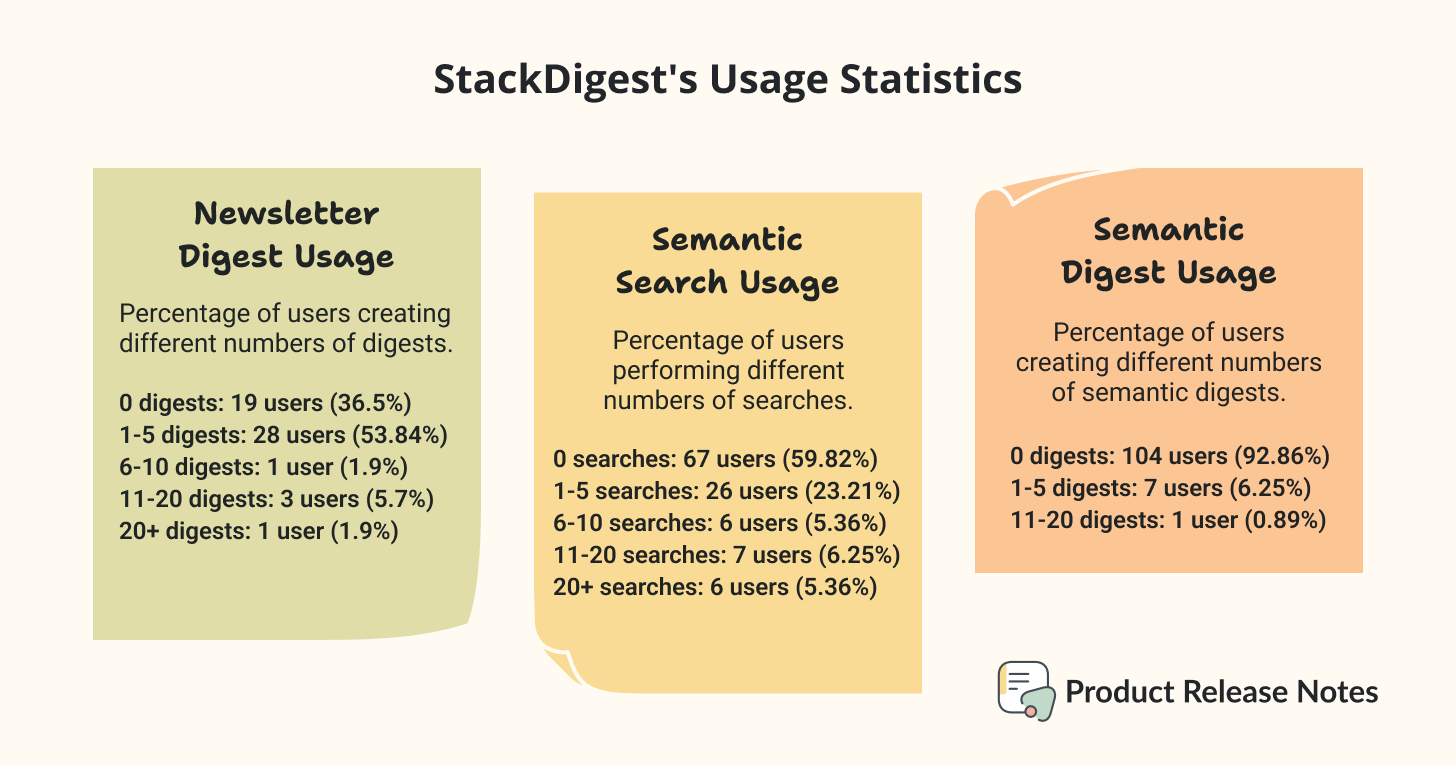

Newsletter digest usage (of users with newsletter libraries):

0 digests: 19 users (36.5%)

1-5 digests: 28 users (53.8%)

6-10 digests: 1 user (1.9%)

11-20 digests: 3 users (5.8%)

20+ digests: 1 user (1.9%)

Semantic search usage:

This feature, which was introduced a few weeks after the tool launched, was used more often than the digest feature: 45 users ran at least one search, compared with 33 who generated a digest. Nineteen people (everyone with six or more searches) came back and used it again and again. Several users described it as “something Substack should really offer,” but didn’t think it was a feature they would pay for.

Two users mentioned that the search feature wasn’t sufficiently differentiated from Google Search, especially when augmented with NotebookLM.

0 searches: 67 users (59.82%)

1-5 searches: 26 users (23.21%)

6-10 searches: 6 users (5.36%)

11-20 searches: 7 users (6.25%)

20+ searches: 6 users (5.36%)

Semantic digest usage:

The semantic digest, which created a curated collection of articles based on semantic search results, was wildly underutilized. This might suggest users are unaware of the feature, that they’d rather pick and choose articles from semantic search results, or both.

0 digests: 104 users (92.86%)

1-5 digests: 7 users (6.25%)

11-20 digests: 1 user (0.89%)
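The breakdowns above group users into activity buckets. To reproduce this kind of breakdown from your own event data, you can start from one count per user. The sketch below assumes you already have those counts, and the per-user counts in it are invented for illustration. It treats the top bucket as 21+, so a user with exactly 20 actions lands only in “11-20”.

```python
from collections import Counter

# Bucket boundaries mirroring the ranges used above; the top bucket is
# treated as 21+ so that a count of exactly 20 falls only in "11-20".
BUCKETS = [
    ("0", 0, 0),
    ("1-5", 1, 5),
    ("6-10", 6, 10),
    ("11-20", 11, 20),
    ("21+", 21, float("inf")),
]


def bucket_for(count: int) -> str:
    """Return the label of the bucket that contains `count`."""
    for label, low, high in BUCKETS:
        if low <= count <= high:
            return label
    raise ValueError(f"negative count: {count}")


def usage_distribution(per_user_counts: dict[str, int]) -> dict[str, tuple[int, float]]:
    """Map each bucket label to (number of users, percent of all users)."""
    tally = Counter(bucket_for(c) for c in per_user_counts.values())
    total = len(per_user_counts)
    return {
        label: (tally[label], 100 * tally[label] / total if total else 0.0)
        for label, _, _ in BUCKETS
    }


# Invented per-user search counts, for illustration only.
example = {"u1": 0, "u2": 0, "u3": 3, "u4": 7, "u5": 20, "u6": 42}
for label, (users, pct) in usage_distribution(example).items():
    print(f"{label}: {users} users ({pct:.1f}%)")
```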

Thoughts and next steps

This data suggests it’s very possible that I’ve built a “vitamin and not a painkiller.” And many users I’ve talked to were ultimately more excited about supporting me and my journey than actually using the tool.

Given all this input, I am thinking seriously about winding down the project.

However, there is one stone left unturned. The analytics feature I recently added to the tool seems to be getting more traffic than everything else combined. And, as a creator myself, I’m thinking that people just might be willing to pay for specific insights about their newsletters based on the data I’ve been collecting to support the search tool. (Thanks to Elena Calvillo, Karo (Product with Attitude) and Karen Smiley for the ideas and support!)

So I will keep the tool alive for the time being as I explore this idea.

The Strategic Questions Every PM Should Ask

As we wrapped up, I wanted to understand Karen’s broader thinking about AI in product development. Not just tactics, but strategy.

Q: If you were consulting for a PM who wants to add AI features to their product, what would your framework be?

Karen: I would ask what they are trying to accomplish from a UX perspective and then consider whether AI is the appropriate solution. AI isn’t the answer to everything.

This might be the most important insight in the entire interview. We’re in an environment where everyone wants to add AI to their product because it’s trendy. Karen’s approach is the opposite. Start with the user experience you want to create, then decide if AI is the right tool to create it.

Q: What has building AI-powered products taught you that traditional product development doesn’t prepare you for?

Karen: I am much more prescriptive with AI in terms of specifically defining user flows, experiences, and data structures. This is because I’ve learned a bit about software design best practices and find that AI, in its zeal to produce code that “just works right now,” doesn’t always follow them.

Q: Where do you see AI in product development going in the next 2 years?

Karen: More people building more products in less time. More tiny, bootstrapped startups. A few big security-related disasters as more vibe coded apps are launched at scale. There’s also the off chance the Internet will basically die if there’s no way to tell fake media from real.

That point about security is sobering but important. As AI makes it easier to build products quickly, we’re going to see more products built without deep technical expertise. Some will be brilliant. Some will have serious security vulnerabilities.

Q: How do you think about competitive advantage when everyone has access to the same AI APIs?

Karen: Trust (e.g., how long has your org been around?). Storytelling. Personal networks and connections. “It’s who you know” times ten.

This is the future of product differentiation in an AI-powered world. When the technology is commoditized, what matters is trust, narrative, and relationships. Karen has built all three by sharing her journey publicly and being radically transparent about both successes and failures.

What This Means For You

If you’re a PM thinking about building something new, here are the key lessons from Karen’s journey:

Validate before you build. A simple post in a community where your target users already exist can save you months of building the wrong thing.

Instrument early, and use the data to keep validating as you build. Karen wishes she’d added analytics and user surveys earlier. Understanding how people actually use your product is more valuable than any assumption you can make.

Build in public. Karen’s growth to 111 users came entirely from documenting her journey and inviting people along for the ride. Every article she wrote, every failure she shared, every milestone she celebrated created another touchpoint for potential users to discover and care about StackDigest.

Remember that every audience is different. LinkedIn created a “fool’s gold” rush of new StackDigest users. Segment user behavioral analysis by source whenever you can.

Understand AI’s limitations. AI coding tools are powerful, but they don’t understand production constraints. Be explicit about your deployment environment and always test in conditions that mirror production.

Start with UX, not technology. Don’t add AI because it’s trendy. Add it because it creates a better user experience that would be impossible or impractical without it.
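Here is one way to make the “segment by source” lesson concrete: a minimal sketch that computes an activation rate for each signup channel. Everything in it is hypothetical and invented for illustration rather than drawn from StackDigest’s data. That includes the field names (`source`, `created_digest`), the choice of “created at least one digest” as the activation milestone, and the records themselves.

```python
from collections import defaultdict


def activation_by_source(users: list[dict]) -> dict[str, float]:
    """Share of users from each signup source who reached the activation
    milestone (in this sketch: created at least one digest)."""
    totals: dict[str, int] = defaultdict(int)
    activated: dict[str, int] = defaultdict(int)
    for user in users:
        source = user["source"]
        totals[source] += 1
        if user["created_digest"]:
            activated[source] += 1
    return {src: activated[src] / totals[src] for src in totals}


# Hypothetical records, for illustration only.
users = [
    {"source": "substack", "created_digest": True},
    {"source": "substack", "created_digest": True},
    {"source": "substack", "created_digest": False},
    {"source": "linkedin", "created_digest": False},
    {"source": "linkedin", "created_digest": False},
    {"source": "linkedin", "created_digest": True},
    {"source": "linkedin", "created_digest": False},
]

for source, rate in sorted(activation_by_source(users).items()):
    print(f"{source}: {rate:.0%} activated")
```

If you look only at a blended total, a burst of low-intent signups from one channel can hide how well another channel is actually converting.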

Karen’s building something genuinely useful, and she’s doing it in a way that more PMs should pay attention to. Not with massive funding or a large team, but with AI tools, transparency, and a real understanding of the problem she’s solving.

StackDigest can be found at stackdigest.io, and it’s currently free to test and use. Karen writes about her building journey at Wondering About AI, and you can find her on Substack as Karen Spinner.

