The Agentic AI Framework Every Product Manager Needs in 2026

Product managers are being asked to deploy AI features they don’t fully understand. Here’s the security and ethics checklist nobody gave you, with real examples of when to say yes and when to say no.

The feature launched on a Monday morning.

By Tuesday afternoon, the product manager who built it was in an emergency meeting with Legal, the CEO, and a very angry Head of Security.

The AI-powered customer service chatbot worked perfectly. It answered questions faster than the human team ever could. Customer satisfaction scores jumped 23% in the first six hours.

Then someone discovered the chatbot was pulling customer data from accounts it shouldn’t have access to. A single prompt could expose another customer’s purchase history, support tickets, even partial credit card information.

The PM hadn’t meant to create a security vulnerability. They’d been told to “add AI to customer service” and ship it before the competitor down the street launched theirs. Nobody asked about data access controls. Nobody mentioned security audits. Nobody said the words “responsible AI.”

The feature was pulled by Wednesday. The company issued a public apology by Thursday. The regulatory fine came six months later.

Here’s what nobody tells product managers: 83% of companies say AI is a top priority in their business plans, but only 39% of product managers received comprehensive, job-specific AI training. You’re being asked to become an AI expert overnight while your competitors ship features weekly and your executives ask why you’re moving so slowly.

The gap between what you’re expected to know and what you actually know isn’t just creating stress. It’s creating real security risks, ethical violations, and expensive failures that could have been prevented.

That’s the dual responsibility nobody prepared you for: building features that work AND don’t break ethics.

Why PMs Are Racing Blind

The Hidden Crisis in AI Product Management

Let me show you what’s actually happening right now.

98% of product managers are already using AI at work. That number sounds impressive until you realize that 66% of them admit to using unapproved AI tools. They’re not doing this because they’re reckless. They’re doing it because they’re drowning in pressure from every direction and nobody’s giving them a framework for making responsible decisions.

Executives are pouring money into AI features while PMs scramble to keep up. I recently wrote about how AI is changing 2026 product roadmaps, and the pressure to ship AI features is the #1 theme I’m seeing across every company.

But here’s what surprises me: the biggest barrier isn’t technology or budget. It’s that nobody’s teaching PMs how to evaluate AI features responsibly. Product managers are being set up to fail.

Where This Pressure Comes From

I’ve sat in enough product meetings to know exactly how this conversation goes.

👩🏻💼 From executives: “Our competitors added AI last quarter. Why don’t we have AI in our product yet?”

They’re not wrong to ask. The pressure to keep pace is real.

👨🏻💼 From sales: “We lost three deals this month because prospects asked about our AI capabilities and we had nothing to show them.”

Again, legitimate concern. Customers expect AI features now, not later.

👩🏼💻 From engineering: “We can build this AI feature in two sprints. Just tell us what you want.”

Sounds great, except they’re not asking about security reviews, ethical implications, or whether this AI should even exist.

🙍🏻 From yourself: “Everyone else seems to be figuring this out. Why am I the only one asking these questions?”

You’re not. You’re just the only one admitting it!

This is how features get shipped without proper evaluation. A product manager, caught between competing pressures and lacking a clear framework for responsible AI deployment, makes the fastest decision rather than the right decision.

The cost? The global average cost of a data breach is $4.44 million, and shadow AI incidents add an average of $670,000 to breach costs.

What PMs Think They’re Being Asked vs. What They Should Ask

Here’s the disconnect I see constantly:

❌ What executives say: “Add AI to this feature”

✅ What they actually mean: “Explore whether AI could improve this feature, and if so, how we can implement it responsibly”

❌ What PMs hear: “Ship AI features fast or fall behind”

✅ What PMs should hear: “Evaluate AI features thoroughly because one mistake could cost millions”

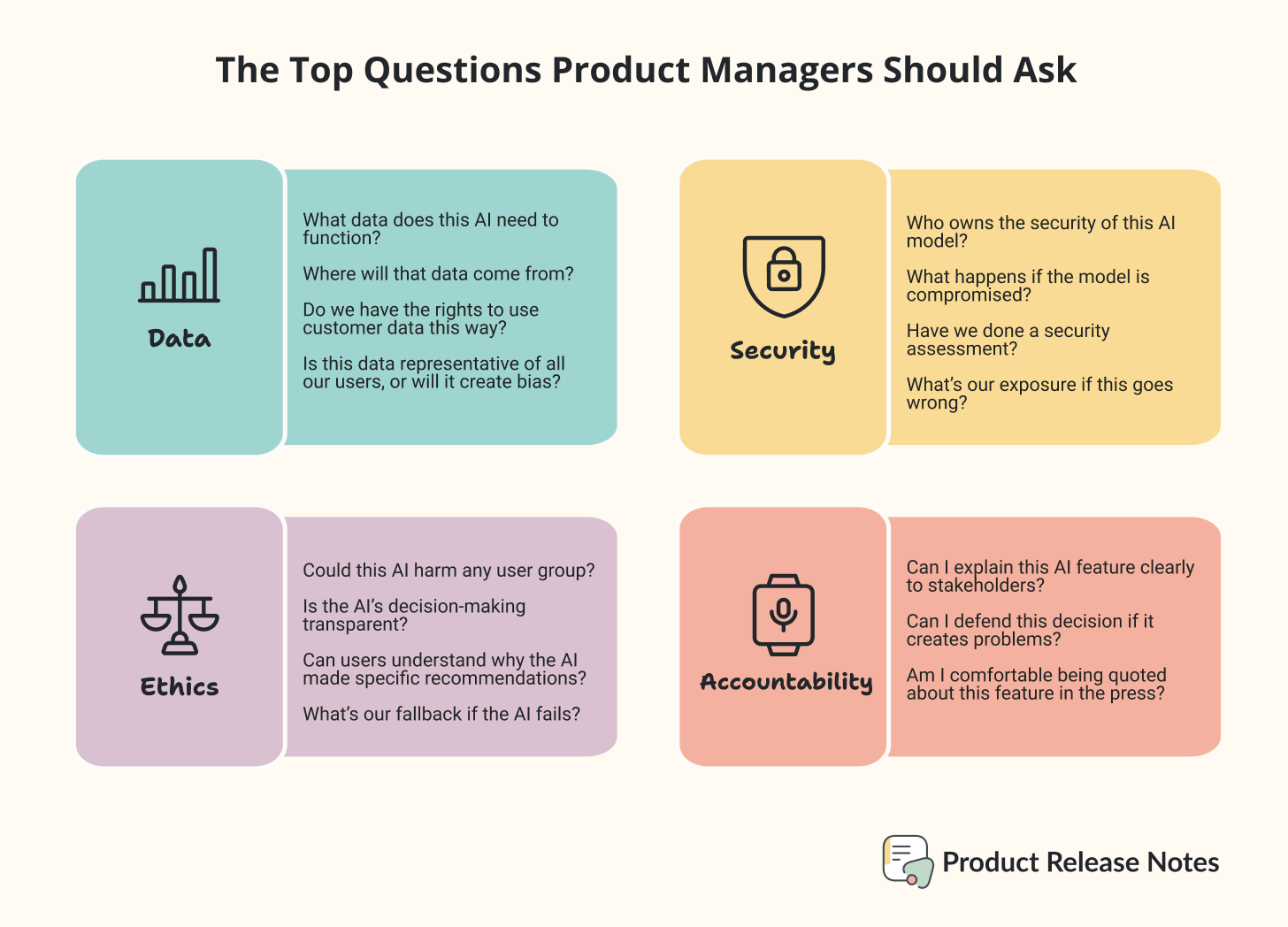

The questions most product managers aren’t asking:

About the data:

What data does this AI need to function?

Where will that data come from?

Do we have the rights to use customer data this way?

Is this data representative of all our users, or will it create bias?

About security:

Who owns the security of this AI model?

What happens if the model is compromised?

Have we done a security assessment?

What’s our exposure if this goes wrong?

About ethics:

Could this AI harm any user group?

Is the AI’s decision-making transparent?

Can users understand why the AI made specific recommendations?

What’s our fallback if the AI fails?

About accountability:

Can I explain this AI feature clearly to stakeholders?

Can I defend this decision if it creates problems?

Am I comfortable being quoted about this feature in the press?

These aren’t hypothetical questions. Look what happens when you skip them.

13% of organizations reported breaches of AI models or applications in the past year, and 97% of those organizations lacked proper AI access controls. Complexity wasn’t the problem: these organizations rolled out AI without basic security measures.

The real crisis? 63% of breached organizations either don’t have an AI governance policy or are still developing one. They’re shipping AI features while still figuring out the rules.

This is where Cristina’s expertise becomes critical. While I can speak to the business pressure and stakeholder dynamics, she understands the security and ethical risks that most product managers don’t even know exist.

Disclaimer: This section was written by me, a human, with assistance from an AI writing tool for editing purposes. I am responsible for the final content and its accuracy.

How Product Managers Can Avoid Pitfalls When Launching New AI Features

Everyone talks about AI, everyone wants to integrate it into their products, everyone expects speedy results. Gaining new users with a shiny new feature is seductive, but most product managers are being asked to ship AI features they don’t have the time to fully test or understand. And when you move fast without reflection, mistakes are inevitable.

The pressure on PMs is brutal, as Elena explained so well. You’re supposed to learn AI overnight, make decisions at lightning speed, and somehow anticipate every issue that might pop up. And when teams, desperate to hit deadlines, start using unapproved tools, the risks multiply.

It’s not just about security. Bias hides in the data fed to the models. Historical inequities are baked in, and suddenly your “smart recommendation engine” favors certain users while leaving others behind. That’s exactly why diverse perspectives matter: build a team with people from many different backgrounds, because someone might spot a risk or bias that everyone else missed. Different experiences and ways of thinking help catch blind spots before they become disasters.

Transparency is often nonexistent, and if you can’t explain how the AI made a recommendation, you can’t defend it to stakeholders, users, or regulators.

These systems are far from perfect, and “hallucinations” happen all the time. The term describes an AI confidently generating wrong answers, which can have unexpected and dangerous consequences. I don’t like it at all, because it anthropomorphizes what is really just a system error. Over-reliance on AI erodes human judgment, intuition, and empathy, the very things that make a product feel alive, human, and useful.

Responsible AI needs to be embedded in every decision you make about what to build, how to build it, and why. In one of my recent newsletters, I drew 10 lessons from the EU AI Act, a framework focused on making sure AI benefits humanity. Even as the Act is being amended to make it more “business-friendly” (and we all know that means fewer guardrails), its core demands for human oversight, clear accountability at every stage, and mandatory transparency still serve as a great blueprint.

In practice, you need governance, accessible documentation, and a culture that prioritizes people. And if you’re licensing an LLM from another company, you need to understand their ethical standards and how they’ll be embedded in your system. That’s the only way to keep customizing the model while staying aligned with your ethical principles, a goal David Danks, Professor of Data Science, Philosophy, and Policy at the University of California San Diego, calls “ethical interoperability.”

Ensuring ethical interoperability is one part of the equation; the other is making sure your team actually understands the systems they’re responsible for.

AI literacy is your superpower. If your team doesn’t understand the fundamentals of AI, its limitations, and the types of risks it carries, it won’t be a successful team. Training, workshops, reading, and discussion should be a living part of your company culture. When everyone knows what these systems can and can’t do, the decisions that prevent disaster become obvious.

Thoughtful product managers can build features that are fast and responsible. You can ship innovation without sacrificing ethics. Rushing, guessing, and crossing your fingers will cost you more than just money.

Start with a basic question. Before you decide AI is the answer, validate that there’s a real, urgent problem worth solving, then ask yourself: Do we really need AI for this, or are we just checking a box?

Again, reflecting and pausing might save you lots of trouble.

Companies need to invest in high-quality data and build user trust through transparency and human oversight. Make sure every AI feature aligns with your product vision. Start small: pilot one or two high-impact use cases before scaling up to a full launch.

Accept failures and pivot. Use an iterative approach to improve features based on real-world feedback.

A thoughtful approach will pay off in the long run with fewer legal headaches and fewer surprises. Everyone’s rushing to add AI to everything, so building it responsibly might actually be the real competitive advantage.

The AI Feature Evaluation Checklist You Need

After seeing these patterns repeatedly, we built a framework that addresses both perspectives: the business reality of shipping features quickly AND the security reality of not creating disasters.

Before You Say Yes to Any AI Feature, Answer These 5 Questions:

1. The Business Question

Why are we adding AI here specifically?

Not “because competitors have it” or “because executives asked for it.” What actual problem does AI solve that a simpler, non-AI approach couldn’t solve just as well?

Write it down: “This AI feature will [specific outcome] which matters because [measurable business impact].” If you can’t complete that sentence, you don’t have a business case. You have a feature request based on AI hype.

🚩 Red flag: If the answer is “to say we have AI” or “because it’s trending,” stop here.

2. The Data Question

What data does this AI need, and do we have rights to use it?

You need to know exactly where your data comes from, whether it’s high quality, what permissions you have, and whether it’s representative of your users. Biased training data can make your AI recommend the wrong things, exclude certain users, and amplify inequities. Consent isn’t optional: if you’re using customer information, that use has to be permitted, secure, and compliant with privacy laws like the General Data Protection Regulation (GDPR) or the California Consumer Privacy Act (CCPA).

⁉️ Ask: Could this dataset create bias? Could it unintentionally harm anyone?
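One concrete piece of the data question is stripping obvious personal identifiers from customer text before a model ever sees it. Here is a minimal sketch, assuming support tickets arrive as plain strings; the regex patterns and the `redact` helper are hypothetical illustrations, and real anonymization needs far more than pattern matching (names, addresses, order numbers, free-text clues).

```python
import re

# Hypothetical patterns for two obvious identifier types. Illustrative only:
# they will miss many forms of PII and can occasionally over-match.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
# 13 to 16 digits, optionally separated by single spaces or hyphens.
CARD = re.compile(r"\b(?:\d[ -]?){12,15}\d\b")


def redact(text: str) -> str:
    """Replace email addresses and card-like numbers with placeholder tokens."""
    text = EMAIL.sub("[EMAIL]", text)
    text = CARD.sub("[CARD]", text)
    return text


ticket = "Customer jane.doe@example.com says card 4111 1111 1111 1111 was charged twice."
print(redact(ticket))
```

Pattern-based redaction is a first filter, not proof of anonymization; the consent and permission questions above still apply to whatever remains.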

3. The Security Question

Where is the model hosted and what’s our exposure if it’s compromised?

Every AI model is a potential entry point for attackers. Make sure access controls, authentication requirements, and vulnerability assessments are in place. Know exactly who can interact with the model, who can see its inputs and outputs, and what happens if it’s breached.

⁉️ Ask: Have we done a vulnerability assessment?
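The opening incident, where a chatbot could read other customers’ data, is exactly the exposure this question should catch. One common mitigation is to enforce authorization in the code that fetches data for the model instead of trusting the prompt. A minimal sketch, assuming a hypothetical in-memory order store and a hypothetical `fetch_orders` tool the chatbot calls; the authenticated session, not anything the user or model types, decides whose records come back.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    order_id: str
    owner_id: str
    summary: str


# Hypothetical data store standing in for a real database.
ORDERS = [
    Order("A-1", "cust-1", "Blue kettle"),
    Order("A-2", "cust-2", "Running shoes"),
]


def fetch_orders(session_user_id: str, requested_owner_id: str) -> list[Order]:
    """Tool exposed to the chatbot.

    requested_owner_id comes from the model's tool call, so it is untrusted.
    session_user_id comes from the login system and is the only authority.
    """
    if requested_owner_id != session_user_id:
        raise PermissionError("chatbot may only read the signed-in customer's records")
    return [o for o in ORDERS if o.owner_id == session_user_id]
```

The design choice here is that a prompt injection asking for “customer cust-2’s orders” fails in ordinary code, regardless of what the model decides to do.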

4. The Ethics Question

Could this harm any user group, and is the decision-making transparent?

Check for bias, run fairness tests, and make sure the AI’s decisions can be explained. Users, regulators, and even internal stakeholders need to understand why your system recommended something.

⁉️ Ask: Is this fair? Could it discriminate? And are we meeting regulatory requirements?
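“Run fairness tests” can start with something as simple as comparing how often the system produces a favorable outcome for each user group, a check often called the demographic parity gap. A minimal sketch; the group labels, the sample data, and the 0.1 tolerance are hypothetical, and a real fairness review would use several metrics and much larger samples.

```python
from collections import defaultdict


def positive_rates(records):
    """records: iterable of (group, got_favorable_outcome) pairs."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, favorable in records:
        totals[group] += 1
        positives[group] += int(favorable)
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(records):
    """Largest difference in favorable-outcome rate between any two groups."""
    rates = positive_rates(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical sample: group A is favored 3 out of 4 times, group B 1 out of 4.
sample = [("A", True), ("A", True), ("A", True), ("A", False),
          ("B", True), ("B", False), ("B", False), ("B", False)]

gap = parity_gap(sample)
if gap > 0.1:  # hypothetical tolerance; agree on it with legal and policy teams
    print(f"Flag for review: outcome rates differ across groups by {gap:.2f}")
```

A gap alone doesn’t prove discrimination, but it tells you where to look before users or regulators do.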

5. The Conversation Question

Can I explain this to stakeholders and defend it if something goes wrong?

This is my test for every AI feature. If I can’t clearly articulate to my CEO, my board, and a journalist exactly how this AI works and why we built it this way, we’re not ready to ship.

Practice this: “Our AI [specific function] using [data source]. It helps users [outcome]. We’ve addressed [security measures] and [ethical considerations]. If it fails, [fallback plan].” If any part of that explanation makes you uncomfortable, dig deeper before shipping!

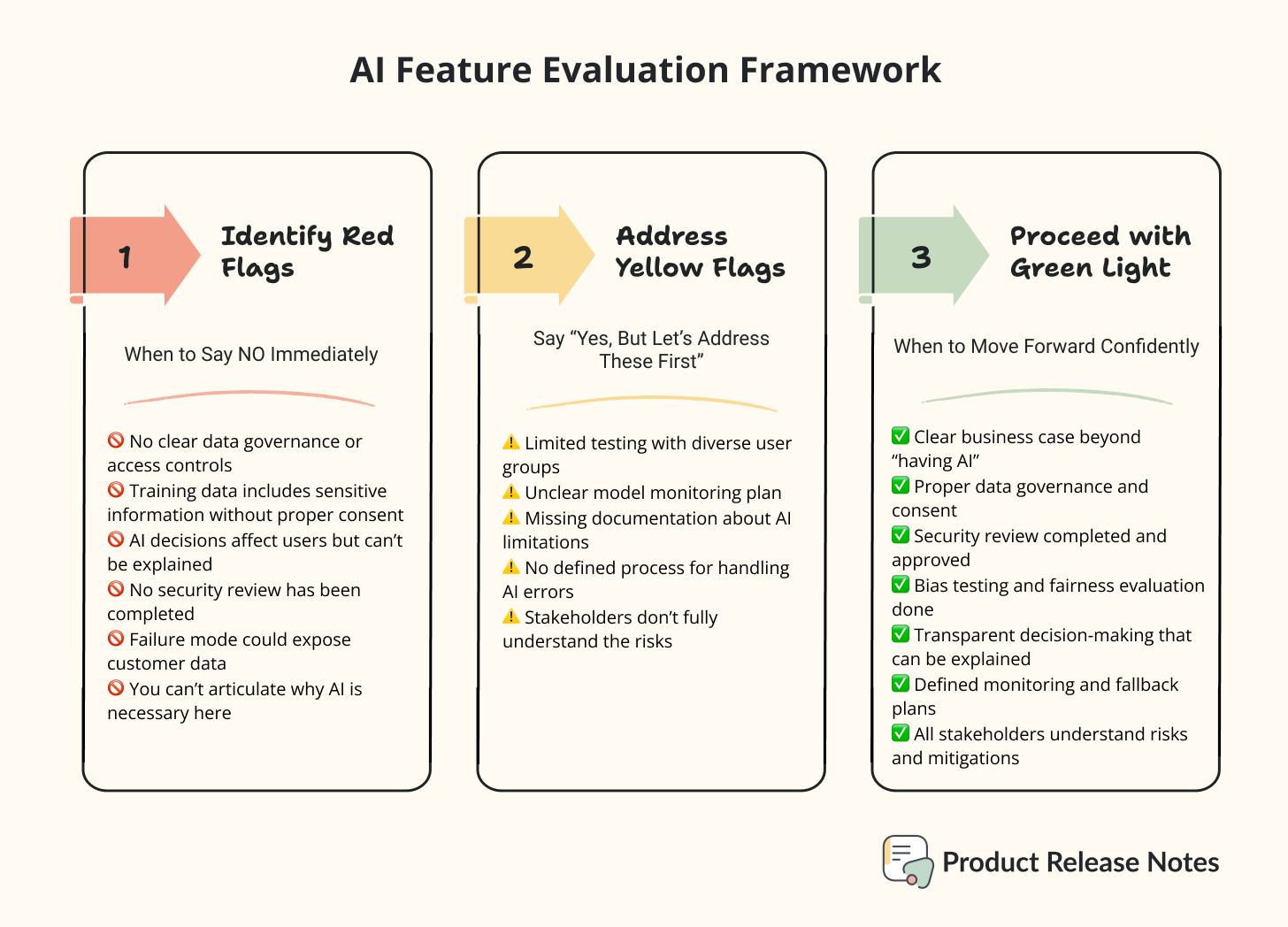

Red Flags: When to Say NO Immediately

🚫 No clear data governance or access controls

🚫 Training data includes sensitive information without proper consent

🚫 AI decisions affect users but can’t be explained

🚫 No security review has been completed

🚫 Failure mode could expose customer data

🚫 You can’t articulate why AI is necessary here

Yellow Flags: Say “Yes, But Let’s Address These First”

⚠️ Limited testing with diverse user groups

⚠️ Unclear model monitoring plan

⚠️ Missing documentation about AI limitations

⚠️ No defined process for handling AI errors

⚠️ Stakeholders don’t fully understand the risks

Green Light: When to Move Forward Confidently

✅ Clear business case beyond “having AI”

✅ Proper data governance and consent

✅ Security review completed and approved

✅ Bias testing and fairness evaluation done

✅ Transparent decision-making that can be explained

✅ Defined monitoring and fallback plans

✅ All stakeholders understand risks and mitigations
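The red, yellow, and green lists boil down to a simple precedence rule: any open red flag means no, any remaining yellow flag means yes with conditions, and only a clean sheet means go. A minimal sketch of that rule, assuming each unresolved concern is recorded with a hypothetical `Severity` label; think of it as a note-taking aid for review meetings, not a substitute for them.

```python
from enum import Enum


class Severity(Enum):
    RED = "red"
    YELLOW = "yellow"


def verdict(open_flags: dict[str, Severity]) -> str:
    """open_flags maps each unresolved concern to its severity."""
    reds = [name for name, sev in open_flags.items() if sev is Severity.RED]
    yellows = [name for name, sev in open_flags.items() if sev is Severity.YELLOW]
    if reds:
        return "NO: " + "; ".join(reds)
    if yellows:
        return "YES, BUT: " + "; ".join(yellows)
    return "GO"


print(verdict({"No security review has been completed": Severity.RED,
               "Unclear model monitoring plan": Severity.YELLOW}))
print(verdict({"Unclear model monitoring plan": Severity.YELLOW}))
print(verdict({}))
```

Red always wins: resolving every yellow flag doesn’t matter while a single red one is still open.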

This framework isn’t about slowing down. It’s about shipping AI features that won’t blow up in your face six months later.

How to Use This Framework In The Product Management World

Cristina and I have your back with real-life examples. Let me walk you through exactly how this works using two scenarios that I’ve seen product managers face.

Scenario 1: Adding an AI Chatbot to Customer Support

The request: VP of Customer Success wants an AI chatbot to handle tier 1 support questions, reduce response time, and free up the human team for complex issues.

Walking through the framework:

Business Question: Reduce average response time from 4 hours to under 5 minutes for common questions. Measurable, specific, addresses real user pain. ✅

Data Question: Needs access to knowledge base articles (public) and past support tickets (customer data). Do we have consent to train AI on customer conversations? Need to verify. ⚠️

Security Question: Where does customer data go? If using third-party AI, what’s their data retention policy? Can chatbot access customer accounts? 🚫 Until verified

Ethics Question: Will chatbot handle all users equally? What about non-English speakers? Users with disabilities? ⚠️ Needs testing

Conversation Question: “Our AI chatbot answers common questions using our knowledge base. Customer support tickets are anonymized before training. The bot can’t access customer accounts. If it can’t answer, it immediately escalates to a human.” ✅

Verdict: Yellow light. Proceed with conditions:

Anonymize training data properly

Implement strict access controls

Test with diverse user groups

Create clear escalation path to humans

Monitor for bias in responses
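The “escalates to a human” promise is easiest to keep when it lives in code rather than in a prompt. A minimal sketch, assuming the bot pipeline returns an answer, a confidence score, and the knowledge-base articles it relied on; the `BotReply` type, the 0.7 threshold, and the no-sources rule are hypothetical choices you would tune during the pilot.

```python
from dataclasses import dataclass, field


@dataclass
class BotReply:
    text: str
    confidence: float  # assumed to be a score in [0, 1] from the bot pipeline
    sources: list[str] = field(default_factory=list)  # knowledge-base articles cited


HANDOFF = "Let me connect you with a member of our support team."


def route(reply: BotReply, threshold: float = 0.7) -> tuple[str, bool]:
    """Return (message_to_user, escalated).

    Escalate when the bot is unsure or cannot point to a knowledge-base
    article that backs its answer.
    """
    if reply.confidence < threshold or not reply.sources:
        return HANDOFF, True
    return reply.text, False


message, escalated = route(BotReply("You can reset it under Settings.", 0.92, ["kb-12"]))
```

Requiring a cited source keeps the bot anchored to the public knowledge base, which is also what the scenario’s conversation answer promises.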

Scenario 2: AI-Powered Content Recommendations

The request: Marketing wants AI to recommend blog posts based on user behavior to increase engagement.

Framework check:

Business: Increase blog engagement by 30%. Clear metric. ✅

Data: Uses behavioral data (pages viewed, time spent). Already collected with consent. ✅

Security: Recommendations don’t require PII. Low risk. ✅

Ethics: Could create filter bubbles, but recommendations are suggestions, not restrictions. ⚠️ Monitor

Conversation: “Our AI suggests relevant content based on what you’ve read. You control what you see.” Simple, transparent. ✅

Verdict: Green light with monitoring plan for content diversity.
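One way to put a number on the content diversity monitoring is to track how evenly recommendations spread across topics. A minimal sketch using normalized Shannon entropy, a metric we are swapping in for illustration rather than anything the scenario prescribes; the topic labels and catalog size are hypothetical. A score near 1 means recommendations cover the catalog evenly; a score drifting toward 0 over time is an early filter-bubble warning.

```python
import math
from collections import Counter


def topic_diversity(recommended_topics: list[str], catalog_size: int) -> float:
    """Normalized Shannon entropy of recommended topics.

    0.0 means every recommendation came from one topic; 1.0 means they were
    spread evenly over all catalog_size topics. Assumes catalog_size counts
    every topic that can appear in recommended_topics.
    """
    if catalog_size < 2 or not recommended_topics:
        return 0.0
    counts = Counter(recommended_topics)
    n = len(recommended_topics)
    entropy = -sum((c / n) * math.log(c / n) for c in counts.values())
    return entropy / math.log(catalog_size)


# Hypothetical week of recommendations from a 4-topic blog.
score = topic_diversity(["ai", "ai", "ai", "pm", "ux", "ai"], catalog_size=4)
```

Dividing by the full catalog size, not just the topics that showed up, keeps a feed that only ever shows two topics from scoring as “perfectly diverse.”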

See the difference? Same pressure to ship AI features, different verdicts, because asking the right questions first surfaced different risks.

Final Thoughts

The shift isn’t from “How fast can we ship AI?” to “How slowly should we move?” It’s from “How fast can we ship AI?” to “How responsibly can we ship AI fast?”

Every minute you spend evaluating an AI feature with this framework saves you hours of cleanup later. Organizations that use AI and automation extensively in their security operations save an average of $1.9 million in breach costs and shorten the breach lifecycle by 80 days. That’s not slowing down innovation. That’s preventing expensive disasters.

This isn’t about being the product manager who says no to everything. It’s about being the product manager who says yes to the right things for the right reasons.

The dual responsibility isn’t a burden. It’s your competitive advantage. Because while your competitors are racing to ship any AI feature, you’re shipping AI features that actually work without breaking trust, security, or ethics.

Two things before you go:

If you want to sharpen your AI product skills before 2026, join my free 24-day AI Advent Challenge starting December 1st. Daily hands-on exercises that actually make you better at this stuff → Sign up here

Would you like to improve your leadership skills and gain insight into your communication style? Subscribers get access to this evaluation system → Try it here

What’s your biggest challenge with evaluating AI features? Have you faced pressure to ship AI without proper review? Share your experience in the comments. 👇
